TMVA::OpenFile ..

Your report is a bit short. Do you have a macro reproducing this problem ?

@moneta may be able to help once you have provided more details.

It crashes in OpenFile … does the file you are trying to open exist?

#0 0x00007f510e6bedba in __GI___wait4 (pid=7219, stat_loc=stat_loc@entry=0x7ffe3862bea8, options=options@entry=0, usage=usage@entry=0x0) at ../sysdeps/unix/sysv/linux/wait4.c:27

#1 0x00007f510e6bed7b in __GI___waitpid (pid=<optimized out>, stat_loc=stat_loc@entry=0x7ffe3862bea8, options=options@entry=0) at waitpid.c:38

#2 0x00007f510e62e0e7 in do_system (line=<optimized out>) at ../sysdeps/posix/system.c:172

#3 0x00007f510ece580e in TUnixSystem::StackTrace() () from /home/rithish/root/buildroot/lib/libCore.so

#4 0x00007f510ece2695 in TUnixSystem::DispatchSignals(ESignals) () from /home/rithish/root/buildroot/lib/libCore.so

#5 <signal handler called>

#6 0x00007f50fb5d7800 in TMVA::TMVAGlob::OpenFile(TString const&) () from /home/rithish/root/buildroot/lib/libTMVAGui.so

#7 0x00007f50fb5bd35d in TMVA::correlations(TString, TString, bool, bool, bool) () from /home/rithish/root/buildroot/lib/libTMVAGui.so

#8 0x00007f50fc866091 in ?? ()

#9 0x0000000000000000 in ?? ()

===========================================================

The lines below might hint at the cause of the crash.

You may get help by asking at the ROOT forum http://root.cern.ch/forum

Only if you are really convinced it is a bug in ROOT then please submit a

report at http://root.cern.ch/bugs Please post the ENTIRE stack trace

from above as an attachment in addition to anything else

that might help us fixing this issue.

===========================================================

#6 0x00007f50fb5d7800 in TMVA::TMVAGlob::OpenFile(TString const&) () from /home/rithish/root/buildroot/lib/libTMVAGui.so

#7 0x00007f50fb5bd35d in TMVA::correlations(TString, TString, bool, bool, bool) () from /home/rithish/root/buildroot/lib/libTMVAGui.so

#8 0x00007f50fc866091 in ?? ()

#9 0x0000000000000000 in ?? ()

=========================================

This is the example macro from the TMVA tutorials; its code follows. When I click the tab, the graph does not show up.

/// \file

/// \ingroup tutorial_tmva

/// \notebook -nodraw

/// This macro provides examples for the training and testing of the

/// TMVA classifiers.

///

/// As input data is used a toy-MC sample consisting of four Gaussian-distributed

/// and linearly correlated input variables.

///

/// The methods to be used can be switched on and off by means of booleans, or

/// via the prompt command, for example:

///

/// root -l TMVARegression.C\(\"LD,MLP\"\)

///

/// (note that the backslashes are mandatory)

/// If no method given, a default set is used.

///

/// The output file "TMVAReg.root" can be analysed with the use of dedicated

/// macros (simply say: root -l <macro.C>), which can be conveniently

/// invoked through a GUI that will appear at the end of the run of this macro.

/// - Project : TMVA - a Root-integrated toolkit for multivariate data analysis

/// - Package : TMVA

/// - Root Macro: TMVARegression

///

/// \macro_output

/// \macro_code

/// \author Andreas Hoecker

#include <cstdlib>

#include <iostream>

#include <map>

#include <string>

#include "TChain.h"

#include "TFile.h"

#include "TTree.h"

#include "TString.h"

#include "TObjString.h"

#include "TSystem.h"

#include "TROOT.h"

#include "TMVA/Tools.h"

#include "TMVA/Factory.h"

#include "TMVA/DataLoader.h"

#include "TMVA/TMVARegGui.h"

using namespace TMVA;

void TMVARegression( TString myMethodList = "" )

{

// The explicit loading of the shared libTMVA is done in TMVAlogon.C, defined in .rootrc

// if you use your private .rootrc, or run from a different directory, please copy the

// corresponding lines from .rootrc

// methods to be processed can be given as an argument; use format:

//

// mylinux~> root -l TMVARegression.C\(\"myMethod1,myMethod2,myMethod3\"\)

//

//---------------------------------------------------------------

// This loads the library

TMVA::Tools::Instance();

// Default MVA methods to be trained + tested

std::map<std::string,int> Use;

// Multidimensional likelihood and Nearest-Neighbour methods

Use["PDERS"] = 0;

Use["PDEFoam"] = 1;

Use["KNN"] = 1;

//

// Linear Discriminant Analysis

Use["LD"] = 1;

//

// Function Discriminant analysis

Use["FDA_GA"] = 0;

Use["FDA_MC"] = 0;

Use["FDA_MT"] = 0;

Use["FDA_GAMT"] = 0;

//

// Neural Network

Use["MLP"] = 0;

#ifdef R__HAS_TMVACPU

Use["DNN_CPU"] = 1;

#else

Use["DNN_CPU"] = 0;

#endif

//

// Support Vector Machine

Use["SVM"] = 0;

//

// Boosted Decision Trees

Use["BDT"] = 0;

Use["BDTG"] = 1;

// ---------------------------------------------------------------

std::cout << std::endl;

std::cout << "==> Start TMVARegression" << std::endl;

// Select methods (don't look at this code - not of interest)

if (myMethodList != "") {

for (std::map<std::string,int>::iterator it = Use.begin(); it != Use.end(); it++) it->second = 0;

std::vector<TString> mlist = gTools().SplitString( myMethodList, ',' );

for (UInt_t i=0; i<mlist.size(); i++) {

std::string regMethod(mlist[i].Data());

if (Use.find(regMethod) == Use.end()) {

std::cout << "Method \"" << regMethod << "\" not known in TMVA under this name. Choose among the following:" << std::endl;

for (std::map<std::string,int>::iterator it = Use.begin(); it != Use.end(); it++) std::cout << it->first << " ";

std::cout << std::endl;

return;

}

Use[regMethod] = 1;

}

}

// --------------------------------------------------------------------------------------------------

// Here the preparation phase begins

// Create a new root output file

TString outfileName( "TMVAReg.root" );

TFile* outputFile = TFile::Open( outfileName, "RECREATE" );

// Create the factory object. Later you can choose the methods

// whose performance you'd like to investigate. The factory will

// then run the performance analysis for you.

//

// The first argument is the base of the name of all the

// weightfiles in the directory weight/

//

// The second argument is the output file for the training results

// All TMVA output can be suppressed by removing the "!" (not) in

// front of the "Silent" argument in the option string

TMVA::Factory *factory = new TMVA::Factory( "TMVARegression", outputFile,

"!V:!Silent:Color:DrawProgressBar:AnalysisType=Regression" );

TMVA::DataLoader *dataloader=new TMVA::DataLoader("dataset");

// If you wish to modify default settings

// (please check "src/Config.h" to see all available global options)

//

// (TMVA::gConfig().GetVariablePlotting()).fTimesRMS = 8.0;

// (TMVA::gConfig().GetIONames()).fWeightFileDir = "myWeightDirectory";

// Define the input variables that shall be used for the MVA training

// note that you may also use variable expressions, such as: "3*var1/var2*abs(var3)"

// [all types of expressions that can also be parsed by TTree::Draw( "expression" )]

dataloader->AddVariable( "var1", "Variable 1", "units", 'F' );

dataloader->AddVariable( "var2", "Variable 2", "units", 'F' );

// You can add so-called "Spectator variables", which are not used in the MVA training,

// but will appear in the final "TestTree" produced by TMVA. This TestTree will contain the

// input variables, the response values of all trained MVAs, and the spectator variables

dataloader->AddSpectator( "spec1:=var1*2", "Spectator 1", "units", 'F' );

dataloader->AddSpectator( "spec2:=var1*3", "Spectator 2", "units", 'F' );

// Add the variable carrying the regression target

dataloader->AddTarget( "fvalue" );

// It is also possible to declare additional targets for multi-dimensional regression, i.e.:

// dataloader->AddTarget( "fvalue2" );

// BUT: this is currently ONLY implemented for MLP

// Read training and test data (see TMVAClassification for reading ASCII files)

// load the signal and background event samples from ROOT trees

TFile *input(0);

TString fname = "./tmva_reg_example.root";

if (!gSystem->AccessPathName( fname )) {

input = TFile::Open( fname ); // check if file in local directory exists

}

else {

TFile::SetCacheFileDir(".");

input = TFile::Open("http://root.cern.ch/files/tmva_reg_example.root", "CACHEREAD"); // if not: download from ROOT server

}

if (!input) {

std::cout << "ERROR: could not open data file" << std::endl;

exit(1);

}

std::cout << "--- TMVARegression : Using input file: " << input->GetName() << std::endl;

// Register the regression tree

TTree *regTree = (TTree*)input->Get("TreeR");

// global event weights per tree (see below for setting event-wise weights)

Double_t regWeight = 1.0;

// You can add an arbitrary number of regression trees

dataloader->AddRegressionTree( regTree, regWeight );

// This would set individual event weights (the variables defined in the

// expression need to exist in the original TTree)

dataloader->SetWeightExpression( "var1", "Regression" );

// Apply additional cuts on the signal and background samples (can be different)

TCut mycut = ""; // for example: TCut mycut = "abs(var1)<0.5 && abs(var2-0.5)<1";

// tell the DataLoader to use all remaining events in the trees after training for testing:

dataloader->PrepareTrainingAndTestTree( mycut,

"nTrain_Regression=1000:nTest_Regression=0:SplitMode=Random:NormMode=NumEvents:!V" );

//

// dataloader->PrepareTrainingAndTestTree( mycut,

// "nTrain_Regression=0:nTest_Regression=0:SplitMode=Random:NormMode=NumEvents:!V" );

// If no numbers of events are given, half of the events in the tree are used

// for training, and the other half for testing:

//

// dataloader->PrepareTrainingAndTestTree( mycut, "SplitMode=random:!V" );

// Book MVA methods

//

// Please look up the various method configuration options in the corresponding cxx files, e.g.:

// src/MethodCuts.cxx, etc., or here: http://tmva.sourceforge.net/optionRef.html

// it is possible to preset ranges in the option string in which the cut optimisation should be done:

// "...:CutRangeMin[2]=-1:CutRangeMax[2]=1:...", where [2] is the third input variable

// PDE - RS method

if (Use["PDERS"])

factory->BookMethod( dataloader, TMVA::Types::kPDERS, "PDERS",

"!H:!V:NormTree=T:VolumeRangeMode=Adaptive:KernelEstimator=Gauss:GaussSigma=0.3:NEventsMin=40:NEventsMax=60:VarTransform=None" );

// And the options strings for the MinMax and RMS methods, respectively:

//

// "!H:!V:VolumeRangeMode=MinMax:DeltaFrac=0.2:KernelEstimator=Gauss:GaussSigma=0.3" );

// "!H:!V:VolumeRangeMode=RMS:DeltaFrac=3:KernelEstimator=Gauss:GaussSigma=0.3" );

if (Use["PDEFoam"])

factory->BookMethod( dataloader, TMVA::Types::kPDEFoam, "PDEFoam",

"!H:!V:MultiTargetRegression=F:TargetSelection=Mpv:TailCut=0.001:VolFrac=0.0666:nActiveCells=500:nSampl=2000:nBin=5:Compress=T:Kernel=None:Nmin=10:VarTransform=None" );

// K-Nearest Neighbour classifier (KNN)

if (Use["KNN"])

factory->BookMethod( dataloader, TMVA::Types::kKNN, "KNN",

"nkNN=20:ScaleFrac=0.8:SigmaFact=1.0:Kernel=Gaus:UseKernel=F:UseWeight=T:!Trim" );

// Linear discriminant

if (Use["LD"])

factory->BookMethod( dataloader, TMVA::Types::kLD, "LD",

"!H:!V:VarTransform=None" );

// Function discrimination analysis (FDA) -- test of various fitters - the recommended one is Minuit (or GA or SA)

if (Use["FDA_MC"])

factory->BookMethod( dataloader, TMVA::Types::kFDA, "FDA_MC",

"!H:!V:Formula=(0)+(1)*x0+(2)*x1:ParRanges=(-100,100);(-100,100);(-100,100):FitMethod=MC:SampleSize=100000:Sigma=0.1:VarTransform=D" );

if (Use["FDA_GA"]) // can also use Simulated Annealing (SA) algorithm (see Cuts_SA options) .. the formula of this example is good for parabolas

factory->BookMethod( dataloader, TMVA::Types::kFDA, "FDA_GA",

"!H:!V:Formula=(0)+(1)*x0+(2)*x1:ParRanges=(-100,100);(-100,100);(-100,100):FitMethod=GA:PopSize=100:Cycles=3:Steps=30:Trim=True:SaveBestGen=1:VarTransform=Norm" );

if (Use["FDA_MT"])

factory->BookMethod( dataloader, TMVA::Types::kFDA, "FDA_MT",

"!H:!V:Formula=(0)+(1)*x0+(2)*x1:ParRanges=(-100,100);(-100,100);(-100,100);(-10,10):FitMethod=MINUIT:ErrorLevel=1:PrintLevel=-1:FitStrategy=2:UseImprove:UseMinos:SetBatch" );

if (Use["FDA_GAMT"])

factory->BookMethod( dataloader, TMVA::Types::kFDA, "FDA_GAMT",

"!H:!V:Formula=(0)+(1)*x0+(2)*x1:ParRanges=(-100,100);(-100,100);(-100,100):FitMethod=GA:Converger=MINUIT:ErrorLevel=1:PrintLevel=-1:FitStrategy=0:!UseImprove:!UseMinos:SetBatch:Cycles=1:PopSize=5:Steps=5:Trim" );

// Neural network (MLP)

if (Use["MLP"])

factory->BookMethod( dataloader, TMVA::Types::kMLP, "MLP", "!H:!V:VarTransform=Norm:NeuronType=tanh:NCycles=20000:HiddenLayers=N+20:TestRate=6:TrainingMethod=BFGS:Sampling=0.3:SamplingEpoch=0.8:ConvergenceImprove=1e-6:ConvergenceTests=15:!UseRegulator" );

if (Use["DNN_CPU"]) {

TString layoutString("Layout=TANH|50,TANH|50,TANH|50,LINEAR");

TString trainingStrategyString("TrainingStrategy=");

trainingStrategyString +="LearningRate=1e-3,Momentum=0.3,ConvergenceSteps=20,BatchSize=50,TestRepetitions=1,WeightDecay=0.0,Regularization=None,Optimizer=Adam";

TString nnOptions("!H:V:ErrorStrategy=SUMOFSQUARES:VarTransform=G:WeightInitialization=XAVIERUNIFORM:Architecture=CPU");

nnOptions.Append(":");

nnOptions.Append(layoutString);

nnOptions.Append(":");

nnOptions.Append(trainingStrategyString);

factory->BookMethod(dataloader, TMVA::Types::kDL, "DNN_CPU", nnOptions); // NN

}

// Support Vector Machine

if (Use["SVM"])

factory->BookMethod( dataloader, TMVA::Types::kSVM, "SVM", "Gamma=0.25:Tol=0.001:VarTransform=Norm" );

// Boosted Decision Trees

if (Use["BDT"])

factory->BookMethod( dataloader, TMVA::Types::kBDT, "BDT",

"!H:!V:NTrees=100:MinNodeSize=1.0%:BoostType=AdaBoostR2:SeparationType=RegressionVariance:nCuts=20:PruneMethod=CostComplexity:PruneStrength=30" );

if (Use["BDTG"])

factory->BookMethod( dataloader, TMVA::Types::kBDT, "BDTG",

"!H:!V:NTrees=2000::BoostType=Grad:Shrinkage=0.1:UseBaggedBoost:BaggedSampleFraction=0.5:nCuts=20:MaxDepth=3:MaxDepth=4" );

// --------------------------------------------------------------------------------------------------

// Now you can tell the factory to train, test, and evaluate the MVAs

// Train MVAs using the set of training events

factory->TrainAllMethods();

// Evaluate all MVAs using the set of test events

factory->TestAllMethods();

// Evaluate and compare performance of all configured MVAs

factory->EvaluateAllMethods();

// --------------------------------------------------------------

// Save the output

outputFile->Close();

std::cout << "==> Wrote root file: " << outputFile->GetName() << std::endl;

std::cout << "==> TMVARegression is done!" << std::endl;

delete factory;

delete dataloader;

// Launch the GUI for the root macros

if (!gROOT->IsBatch()) TMVA::TMVARegGui( outfileName );

}

int main( int argc, char** argv )

{

// Select methods (don't look at this code - not of interest)

TString methodList;

for (int i=1; i<argc; i++) {

TString regMethod(argv[i]);

if(regMethod=="-b" || regMethod=="--batch") continue;

if (!methodList.IsNull()) methodList += TString(",");

methodList += regMethod;

}

TMVARegression(methodList);

return 0;

}

Please read tips for efficient and successful posting and posting code

Also, please specify the ROOT version, platform, and compiler, and tell us how you installed ROOT.

Hi bellenot,

ROOT version: 6.22.02

Operating system: Ubuntu 20.04

I installed ROOT from source.

Thanks. So maybe @moneta can give some hints

Hi,

To understand the problem better: does the crash happen in the TMVARegGui when opening the output file?

If this is the case, can you please share the file, e.g. by posting a public link?

Thank you

Lorenzo

Dear Dr. Moneta @moneta

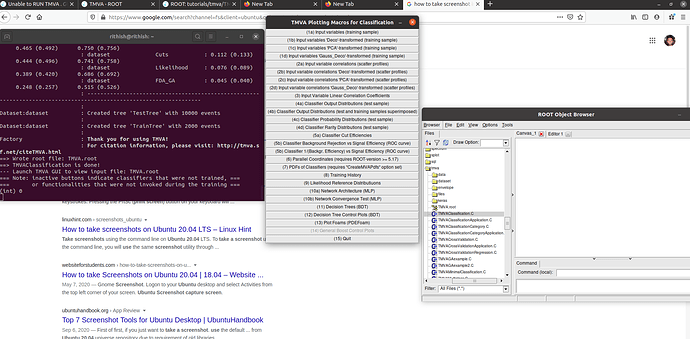

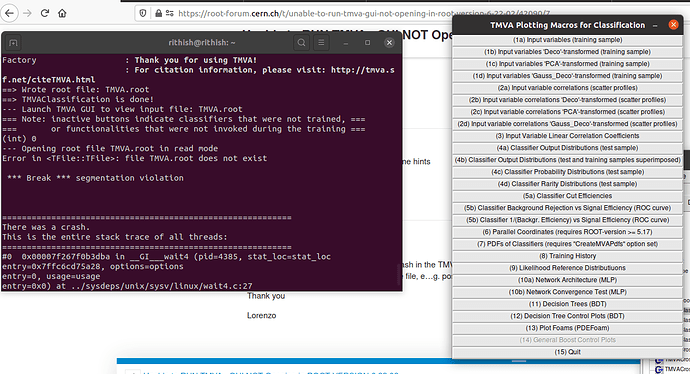

The real problem is in running the TMVA tutorial macro TMVARegression.C, and likewise all the other macros in the TMVA tutorials directory. After the macro has run, the GUI pops up. When I click on the buttons in the TMVA GUI, I expect a graph to pop up, since I am running a predefined tutorial file.

The following is the problem. This crash happens with all the macros in the TMVA tutorials folder. For example, after executing TMVAClassification.C from the tutorials folder (documented at “https://root.cern/doc/v622/TMVAClassification_8C.html”), the following crash happens.

"There was a crash.

This is the entire stack trace of all threads:

#0 0x00007f267f0b3dba in __GI___wait4 (pid=4385, stat_loc=stat_loc@entry=0x7ffc6cd75a28, options=options@entry=0, usage=usage@entry=0x0) at …/sysdeps/unix/sysv/linux/wait4.c:27

#1 0x00007f267f0b3d7b in __GI___waitpid (pid=, stat_loc=stat_loc@entry=0x7ffc6cd75a28, options=options@entry=0) at waitpid.c:38

#2 0x00007f267f0230e7 in do_system (line=) at …/sysdeps/posix/system.c:172

#3 0x00007f267f6da80e in TUnixSystem::StackTrace() () from /home/rithish/root/buildroot/lib/libCore.so

#4 0x00007f267f6d7695 in TUnixSystem::DispatchSignals(ESignals) () from /home/rithish/root/buildroot/lib/libCore.so

#5

#6 0x00007f266bfbf800 in TMVA::TMVAGlob::OpenFile(TString const&) () from /home/rithish/root/buildroot/lib/libTMVAGui.so

#7 0x00007f266bfc4eea in TMVA::variables(TString, TString, TString, TString, bool, bool) () from /home/rithish/root/buildroot/lib/libTMVAGui.so

#8 0x00007f266d2590cc in ?? ()

#9 0x0000000000000025 in ?? ()

#10 0x0000000000000000 in ?? ()

The lines below might hint at the cause of the crash.

You may get help by asking at the ROOT forum http://root.cern.ch/forum

Only if you are really convinced it is a bug in ROOT then please submit a

report at http://root.cern.ch/bugs Please post the ENTIRE stack trace

from above as an attachment in addition to anything else

that might help us fixing this issue.

#6 0x00007f266bfbf800 in TMVA::TMVAGlob::OpenFile(TString const&) () from /home/rithish/root/buildroot/lib/libTMVAGui.so

#7 0x00007f266bfc4eea in TMVA::variables(TString, TString, TString, TString, bool, bool) () from /home/rithish/root/buildroot/lib/libTMVAGui.so

#8 0x00007f266d2590cc in ?? ()

#9 0x0000000000000025 in ?? ()

#10 0x0000000000000000 in ?? ()

"

Kindly take a look at the second screenshot. When I click a tab in the GUI, there is an error saying that the TMVA ROOT file does not exist, but it is actually there in the TMVA tutorials folder. I suspect the latest ROOT version, 6.22.02, which I am using. Or did I make a mistake while building ROOT from source? Kindly help me with this.
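A relative file name such as "TMVAReg.root" is resolved against the current working directory of the process, so a file can sit in the tutorials folder and still be reported as missing if the session was started elsewhere. The following is a minimal sketch in plain C++17 for checking what a name resolves to. It makes no ROOT calls, and `describeLookup` is a hypothetical helper for illustration, not a TMVA function; whether the GUI uses the name unchanged is an assumption here.

```cpp
#include <cassert>
#include <filesystem>
#include <string>
#include <system_error>

namespace fs = std::filesystem;

// Hypothetical helper (not part of ROOT or TMVA): reports the absolute path
// that `name` resolves to from the current working directory, and whether a
// regular file exists at that location.
std::string describeLookup(const std::string& name) {
    assert(!name.empty());                         // an empty name is a caller error
    const fs::path resolved = fs::absolute(name);  // relative names are joined to the cwd
    std::error_code ec;                            // non-throwing overload: errors count as "missing"
    const bool found = fs::is_regular_file(resolved, ec);
    return resolved.string() + (found ? " [found]" : " [missing]");
}
```

Calling `describeLookup("TMVAReg.root")` from a small `main` started in the same directory as the ROOT session would show which absolute path is being probed.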

Dear @moneta

I found where I was going wrong: I was running the macros inside ROOT. For example, “root -l ./TMVAClassification.C("Fisher,Likelihood")”. But if I run the same directly from the terminal, it does not crash. Can you tell me why this happens?

Hi,

It is probably an issue with the location of the output file, which depends on where you run the macro from and where you then run the GUI.

Lorenzo
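To illustrate the point about the output file location, here is a small sketch in plain C++17 (no ROOT calls) showing that the same relative name is found from one working directory and not from another. The directory names, the placeholder file, and the helpers `visibleFrom` and `demoWorkingDirectoryMatters` are all hypothetical and only serve the illustration; the placeholder is not a real ROOT file.

```cpp
#include <cassert>
#include <filesystem>
#include <fstream>
#include <string>

namespace fs = std::filesystem;

// Hypothetical helper: returns true if `name` resolves to an existing file
// when looked up from directory `cwd`. The previous working directory is
// restored before returning.
bool visibleFrom(const fs::path& cwd, const std::string& name) {
    assert(fs::is_directory(cwd));
    const fs::path previous = fs::current_path();
    fs::current_path(cwd);                 // comparable to `cd cwd` in a shell
    const bool found = fs::exists(name);   // relative lookup from the new cwd
    fs::current_path(previous);
    return found;
}

// Creates two scratch directories, writes a placeholder output file into the
// first one only, and checks from where the relative name can be found.
bool demoWorkingDirectoryMatters() {
    const fs::path base = fs::temp_directory_path() / "tmva_cwd_demo";
    const fs::path tutorials = base / "tutorials";
    const fs::path elsewhere = base / "elsewhere";
    fs::remove_all(base);
    fs::create_directories(tutorials);
    fs::create_directories(elsewhere);
    {
        std::ofstream out(tutorials / "TMVAReg.root");
        out << "placeholder";              // stand-in content only
    }
    const bool seenInTutorials = visibleFrom(tutorials, "TMVAReg.root");
    const bool seenElsewhere = visibleFrom(elsewhere, "TMVAReg.root");
    fs::remove_all(base);                  // clean up the scratch directories
    return seenInTutorials && !seenElsewhere;
}
```

`demoWorkingDirectoryMatters()` returns true when the file is visible only from the directory it was written to, which is the situation described above: the macro writes its output relative to one directory, and a GUI started from another directory looks for it somewhere else.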