Dear All,

I have been recently using the RooStats::MCMCCalculator class to calculate an upper limit, using a model with one parameter of interest and several nuisance parameters, 11 to be specific. I realised that in the implementation of the Metropolis-Hastings algorithm that the class is using, not all the steps are saved in the Markov chain. Instead, only the points that were accepted by the algorithm are added to the chain. This becomes quite obvious when you compare the number of steps of the final Markov Chain and the number of iterations that were set initially. Also, I looked into the implementation file of the class (https://root.cern.ch/doc/v606/MetropolisHastings_8cxx_source.html) and I’m convinced now that the rejected events are not stored.

Is this correct? My understanding about the Metropolis-Hastings algorithm is that in the final step of the algorithm you either accept the candidate point, add it to the chain and move there or you stay at the current point, but you also add this point to the chain so that it is counted TWICE. Saving these extra points may be a problem at the beginning of the chain, when you haven’t reached a stable regime yet, but that’s why the burn-in steps are for.

Are you aware of this point? If so, could anyone explain what is the reason behind implementing the Metropolis-Hastings algorithm in this particular way? Has this algorithm been revised in future versions of ROOT? (I’m using 5.34.20).

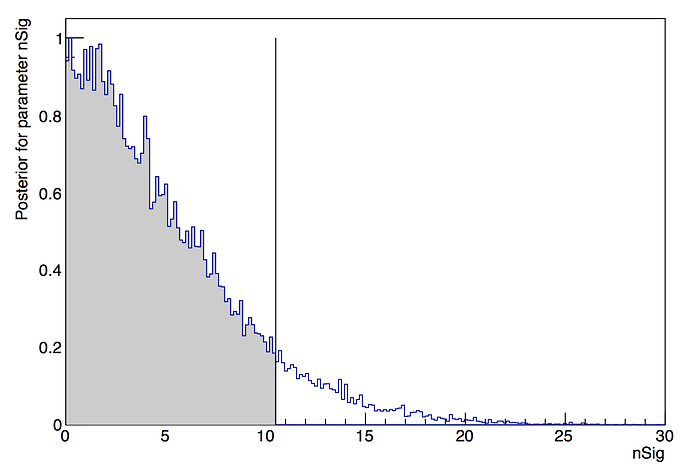

I’m not sure if it is related, but in my analysis I haven’t managed yet to get a smooth posterior distribution, even for a large number of iterations. As an example, I attach the posterior distribution that I obtain as a function of the POI for one million number of iterations, and as you can see the distribution has still some noticeable fluctuations.

Many thanks,

Ibles