Hello everyone,

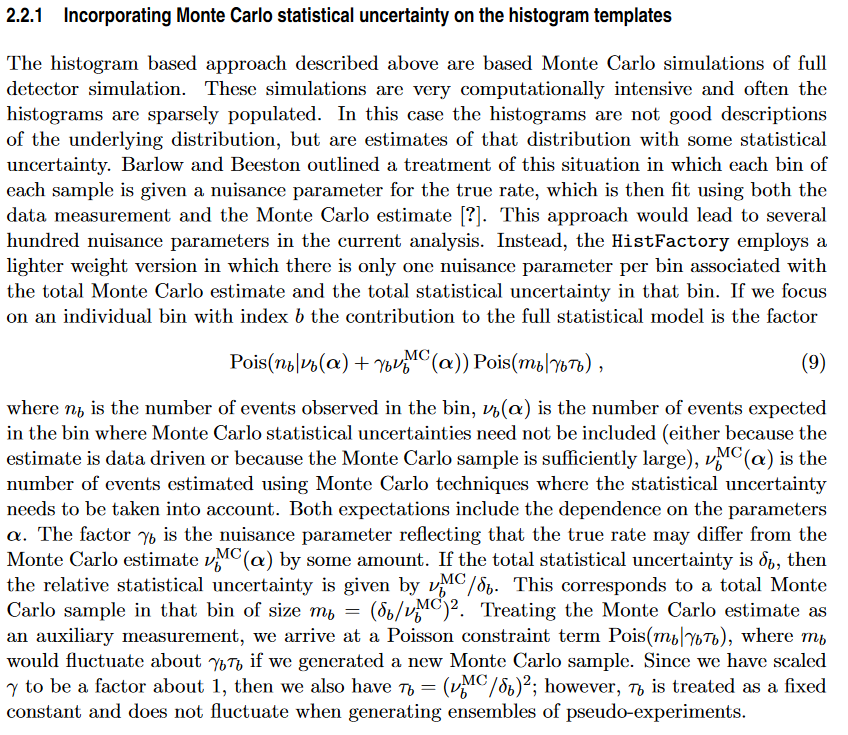

I have been trying to understand the lite version of the Beeston-Barlow method, that allows to take into account the limited statistics of the MC samples the fit templates are built from directly during the fit. This method is explained with precision in the following preprint article, in section 2.2.1 (an image is attached below). However, I’m still struggling to understand some piece of notation that were used, even though some of them were partially answered in this topic that dates back to 2018.

In the lite method, instead of varying the bin counts of each sample, this is done globally for all the MC samples. This means only one bin-wise scale factor gamma_b per bin b is defined, and is somehow constrained to vary under the overall MC statistical uncertainty.

Here are a list of questions I unfortunately can’t get past

-

sigma_bis defined as the total statistical uncertainty. Is it the total statistical uncertainty of the MC samples stacked together? So is it justsqrt(uncertainty_bin_b_sample_1^2 + uncertainty_bin_b_sample_2^2 + ...), whereuncertainty_bin_b_sample_1is either

-

sqrt(counts in bin b for the sample 1)for an unweighted sample, -

sqrt(sum of squared weights for events belonging the sample 1 and bin b)for a weighted sample

Is that right? Or does the computation of sigma_b also involves the yields of their templates in the fit?

-

nu_b^MCis, from my understanding, just the amount of observed events in the binbthat are modeled by MC samples, so this is justsum over the samples s [(counts in bin b in sample s) / (total number of events in the sample s) * yield of the component s found by the fit]. -

tau_b(which, I guess, is supposed to represent the “statistical power” of the MC samples in the bin b) is computed as(vu_b^MC / delta_b)^2, and is “treated as a fixed constant and does not fluctuate when generating ensembles of pseudoexperiments”. Does this mean thattau_b^MCdoes not change during the fit, sonu_b^MCis computed at the start of the fit according the starting point of the fit, and is then unchanged during the fit? -

Finally, despite the explanations in the 2018 topic, I do not understand why

gamma tau_b = gamma (nu_b^MC/delta_b)^2is the quantity that is constrained, and that it is the size of total monte carlo sample in that bin.nu_b^MCanddelta_bseem to be unrelated quantites, asnu_b^MC, on the one hand, is predicted by the fit, and is solely related to the data that is being fitted. On the other hand,delta_bseems to be only related to the MC statistics.

I’m sorry for such a long message, with so many questions, and if don’t understand something that is completely obvious or annoying to explain, or if my message is confusing.

Thanks for any answers,

Anthony.

PS: I’ve been trying to understand how this method works in order to use it together with the python library zfit. I know that another python library, pyhf, closely related to HistFactory, has implemented the lite Beeston-Barlow method, but with Gaussian constraint. The way to do it with Poisson constraint using their framework is still unclear to me.

…

"Normal" Beeston-Barlow method

The “normal” Beeston-Barlow method seems clear to me, it is very well explained in the original 1993 paper. In brief, the number of generated candidates for each bin of each MC sample is Poisson-constrained, instead of just being a constant. This way, if the MC sample does not contain enough statistics to represent well enough the true underlying PDF (Probability Density Function), the bin counts for each sample can slighly accomodate. Then you can either minimise the overall likelihood or equivalently solve some equations iteratively).

In, this paper, the factor needed to normalise the histogram and scale the template are explicitely given in equation (1). The parameters of the constrained terms look also easier to compute and understand because you do not need to combine the statistical uncertainty of different MC samples that can appear with different (possibly varying) yields in the fit.