Hi,

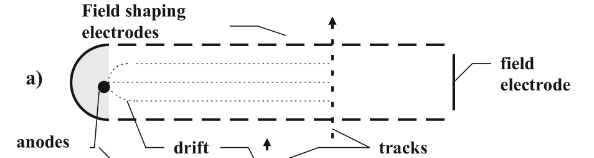

I'm simulating a drift chamber, using Comsol as the field solver, with code adapted from the Comsol example. For each of several distances from the anode wire, 100 muons pass through the chamber, and I want to collect all the data from `drift.GetEndPoint(xf, yf, zf, tf, status)` and save it to a file.

I ran this before with a spacing of 1 cm between the distances and it worked fine. Now I tried to rerun the code with a spacing of 1 mm, and the program takes too long and gets killed. I use a gas file that covers electric fields from 100 V/cm to 500000 V/cm in 20 logarithmically spaced steps, because my electrode potentials range from -10000 V to 2000 V. I have tried different gas, mesh and potential files to see whether it is simply too much to load into Garfield, but nothing changed. This is my code:

```cpp
#include <cstdlib>
#include <fstream>
#include <iostream>
#include <string>

#include <sys/stat.h>
#include <sys/types.h>

#include <TApplication.h>
#include <TCanvas.h>
#include <TH1F.h>

#include "Garfield/ComponentComsol.hh"
#include "Garfield/ViewField.hh"
#include "Garfield/ViewFEMesh.hh"
#include "Garfield/MediumMagboltz.hh"
#include "Garfield/Sensor.hh"
#include "Garfield/AvalancheMicroscopic.hh"
#include "Garfield/AvalancheMC.hh"
#include "Garfield/Random.hh"
#include "Garfield/DriftLineRKF.hh"
#include "Garfield/TrackHeed.hh"

using namespace Garfield;

int main(int argc, char* argv[]) {
  TApplication app("app", &argc, argv);

  // Load the field map.
  ComponentComsol fm;
  fm.Initialise("mesh.mphtxt", "dielectrics.dat", "field.txt", "mm");
  fm.PrintRange();

  // Set up the gas.
  MediumMagboltz gas;
  gas.LoadGasFile("ar_82_co2_18_293K_1bar_500000.gas");

  // Load the ion mobilities (std::getenv returns nullptr if the variable is unset).
  const char* install = std::getenv("GARFIELD_INSTALL");
  if (!install) {
    std::cerr << "GARFIELD_INSTALL is not set!" << std::endl;
    return 1;
  }
  const std::string path = install;
  gas.LoadIonMobility(path + "/share/Garfield/Data/IonMobility_Ar+_Ar.txt");

  // Associate the gas with the corresponding field map material.
  fm.SetGas(&gas);
  fm.PrintMaterials();

  // Create the sensor.
  Sensor sensor;
  sensor.AddComponent(&fm);
  // sensor.SetArea(-25.2, 0, -0.5, 0, 0.1, 0.5);

  // Set up Heed.
  TrackHeed track;
  track.SetParticle("muon");
  track.SetEnergy(3.e9);
  track.SetSensor(&sensor);

  // RKF integration.
  DriftLineRKF drift(&sensor);
  // drift.SetGainFluctuationsPolya(0., 20000.);

  // Create the output directory.
  const std::string outputDir = "electron_positions_mu_3GeV_293K_1mm_test/";
  mkdir(outputDir.c_str(), 0777);

  // Scan x0 = -0.1, -0.2, ..., -25.2 cm. An integer counter avoids the
  // rounding errors that build up when 0.1 is subtracted repeatedly.
  for (int ix = 1; ix <= 252; ++ix) {
    const double x0 = -0.1 * ix;
    std::ofstream outFile(outputDir + "electron_positions_" + std::to_string(x0) + ".txt");
    if (!outFile) {
      std::cerr << "Error creating the output file!" << std::endl;
      return 1;
    }
    // Write the header.
    outFile << "x y z E t x_e y_e z_e t_e\n";

    constexpr unsigned int nEvents = 100;
    for (unsigned int i = 0; i < nEvents; ++i) {
      std::cout << i << "/" << nEvents << "\n";
      sensor.ClearSignal();
      // Muon starting at (x0, 0.05, 0.496) cm at t = 0, moving along -z.
      track.NewTrack(x0, 0.05, 0.496, 0, 0, 0, -1);
      for (const auto& cluster : track.GetClusters()) {
        for (const auto& electron : cluster.electrons) {
          // Start point, energy and time of the ionisation electron.
          const double xe = electron.x;
          const double ye = electron.y;
          const double ze = electron.z;
          const double ee = electron.e;
          const double te = electron.t;
          // Drift the electron and retrieve its end point.
          drift.DriftElectron(electron.x, electron.y, electron.z, electron.t);
          double xf = 0., yf = 0., zf = 0., tf = 0.;
          int status = 0;
          drift.GetEndPoint(xf, yf, zf, tf, status);
          // Write the data to the file.
          outFile << xe << " " << ye << " " << ze << " " << ee << " " << te << " "
                  << xf << " " << yf << " " << zf << " " << tf << "\n";
        }
      }
    }
    outFile.close();
  }
  // Start the ROOT event loop (blocks until the application is terminated).
  app.Run(kTRUE);
}
```

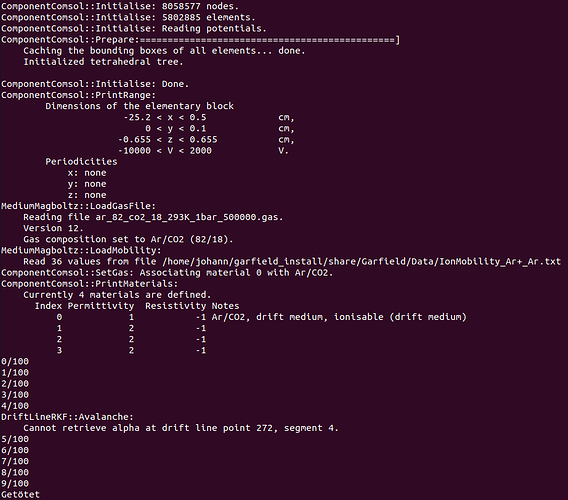

And this is the terminal output:

Do you have any idea why the program gets stopped after a certain amount of time? I would appreciate your help, thanks!