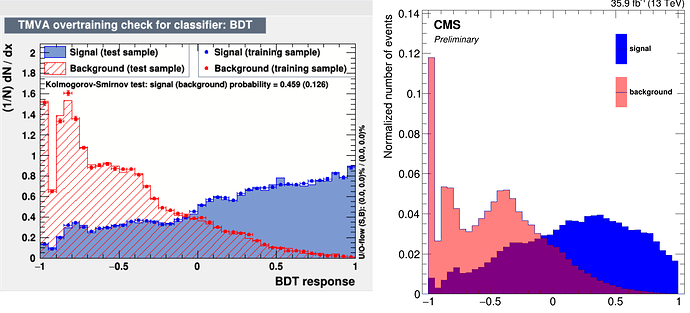

Using the TMVAGUI plotting macro, I’ve generated the following response plot (left).

If I manually recreate this plot, however, I get the following (right).

I’ve checked the weights, and as far as I know they are the same for both. I have also taken into account that I ignore negative weights during training.

With the same plotting code, however, I’ve also plotted some of the input variables to compare them with the plots created by the TMVA macro. These look essentially the same.

In the .root output file of TMVA there is a trainingtree with the input variables in branches and an already existing branch with the BDT response. Using the input variable branches I’ve plotted the response with the same plotting code and compared this to the already existing BDT response branch. In this case they are exactly identical.

I have no idea what’s going wrong and why I cannot reproduce the same response plot.

During training I get the error “The error rate in the BDT boosting is > 0.5. (0.511565) That should not happen, please check your code (i.e… the BDT code), I stop boosting here”. I am not sure how to get rid of it, but I also don’t think it should influence my result like this.

I’m using ROOT 6.12/07

Thanks for your reply.

I’ve tried several ways of treating the weights to get the plot on the right in my original post. In each case (weights = 1, ignore negative weights, use weights as is) there are still significant differences.

I’ve added a branch isSignal (0 for bg, 1 for sig) to my trees and taken a look at the training and testing tree outputs of TMVA. In these trees (located in “dataset_NAME/TrainTree” and “dataset_NAME/TestTree”) there’s a BDT discriminant value already calculated for each event. I’ve taken all events from the training and testing tree, isolated the signal and plotted the discriminant value.

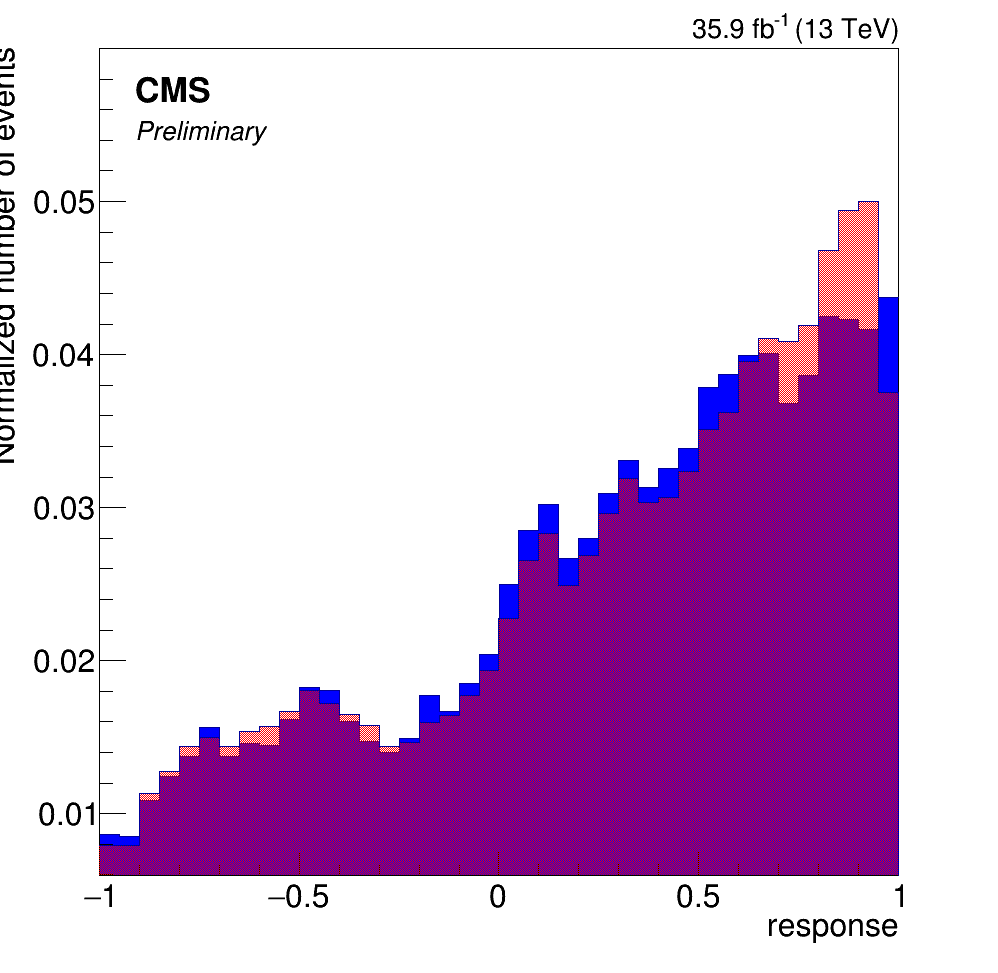

Then I took the histogram from the TMVA output “dataset_NAME/Method_BDT/BDT/MVA_BDT_S” and plotted it on the same canvas.

That gives this figure (the first one is red, the second one blue):

I expected these to be identical, and it’s also clear that this is different from the plot on the right in my original post. I’m not sure why the tree changes after going through TMVA. There are also differences between the eventNb in my input tree and in the TMVA output.

What exactly do you mean by mismatched weights? I’m going to look at specifically which event numbers differ between the two trees and check the values of the variables and weights in those events.

Additionally, do you have any idea what causes the training error?

Hi,

If there is a difference in event numbers between your two trees, then you are operating on different data and should expect different output. The event numbers found in the train tree correspond to the ones TMVA saw during training.

MVA_BDT_S contains data from the test tree. MVA_BDT_Train_S contains data from the training tree. If you use data from both trees to construct your histogram it will not match either of the two histograms exactly.
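To make that comparison concrete, here is a minimal sketch of selecting one class from a single output tree, so the result can be compared with `MVA_BDT_S` (test tree) or `MVA_BDT_Train_S` (training tree) separately. It assumes the trees carry a `classID` branch and a response branch named `BDT`, as in this thread. `responses_for_class` is a hypothetical helper, the events are made-up stand-ins, and which integer marks signal has to be checked in your own file.

```python
from collections import namedtuple


def responses_for_class(events, class_id, branch="BDT"):
    """Collect the stored response for every event of one class.

    `events` is any iterable of objects exposing `classID` and `<branch>`
    as attributes (a PyROOT tree behaves this way when iterated).
    """
    return [getattr(ev, branch) for ev in events if ev.classID == class_id]


# Made-up stand-ins for the TMVA TestTree and TrainTree.
Event = namedtuple("Event", ["classID", "BDT"])
test_tree = [Event(0, 0.21), Event(1, -0.13), Event(0, 0.05)]
train_tree = [Event(0, 0.30), Event(1, -0.40)]

# Assumption for this toy data only: classID 0 is the signal class.
test_signal = responses_for_class(test_tree, class_id=0)
train_signal = responses_for_class(train_tree, class_id=0)

# Mixing both trees gives a sample that differs from either one alone,
# so its histogram cannot match MVA_BDT_S or MVA_BDT_Train_S exactly.
combined = test_signal + train_signal
```

With PyROOT, the same helper should work on the tree returned by the output file’s `Get("dataset_NAME/TestTree")`, with `dataset_NAME` as used earlier in this thread.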

Furthermore, you can verify that you and TMVA agree on what is signal by checking the classID branch of the tree. (It is not a given that 1 corresponds to your signal class; it depends on the order of definition during training. If you do not find a mismatch, then you agree.)


We can forget the mismatched weights for now. This was mainly a concern if we use the TMVA::Reader to generate the output (then one must make sure that TMVA and oneself agree on the weights as well).

For the error, that might be related to negative weights still being used (I can’t say more without knowing more about the setup). If it is not about the negative weights, then I don’t know. Maybe @moneta could provide some input?
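As a rough illustration of why negative weights are a plausible suspect: in textbook AdaBoost, the error rate of a tree is the weighted fraction of misclassified events. The sketch below implements that textbook definition, not TMVA’s actual code, and the weights are made up. A single negative weight among the correctly classified events shrinks the denominator and can push the rate above 0.5 even when most events are classified correctly.

```python
def weighted_error_rate(weights, misclassified):
    """Textbook AdaBoost error: sum of misclassified weights / sum of all weights."""
    total = sum(weights)
    wrong = sum(w for w, bad in zip(weights, misclassified) if bad)
    return wrong / total


# Made-up example: four events, only the first one is misclassified.
weights = [1.0, 1.0, 1.0, -2.0]
misclassified = [True, False, False, False]

# Using the weights as they are: 1 / (1 + 1 + 1 - 2).
raw_rate = weighted_error_rate(weights, misclassified)

# Dropping events with negative weight before computing the rate: 1 / 3.
kept = [(w, bad) for w, bad in zip(weights, misclassified) if w > 0]
positive_rate = weighted_error_rate([w for w, _ in kept], [bad for _, bad in kept])
```

Here `raw_rate` ends up above 0.5 and `positive_rate` below it, which is the kind of behaviour the error message complains about.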

EDIT: This post previously talked about histogram normalisation. That turned out to be a red herring.

Cheers,

Kim

Thanks again for the reply.

The eventNb problem was caused by a bug.

I think I have found the cause of the problem.

One of the variables given to TMVA to train the BDT is the number of jets, which is an integer. In my input tree it is also defined as an integer, and I pass it to the loader explicitly as an integer.

However, in the training/testing trees this variable is suddenly a float. In the reader it is impossible to define a variable as an integer (I get an error saying this is deprecated).

Evaluating the discriminant for two identical events where in one case njets is a float and in the other it is an integer results in very different values. I’m not sure why this happened but I at least know what is happening now.

Any ideas how to solve this?

[EDIT]: I’ve solved it.

I was confused since I gave the branch to the reader as a float (and similarly for the loader):

    for branch in list_of_discrete_variables:
        branches[branch] = array('f', [0.])
        inputTree.SetBranchAddress(branch, branches[branch])
        reader.AddVariable(branch, branches[branch])

But the trick was to explicitly set the local variable to be equal to the variable of the current event, and forcing it to be a float:

    for i in range(inputTree.GetEntries()):
        inputTree.GetEntry(i)
        ###
        for branch in list_of_discrete_variables:
            branches[branch][0] = float(getattr(inputTree, branch))
        ###
        discriminantValue = reader.EvaluateMVA("BDT")
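One plausible mechanism for the very different values (an assumption, not verified against this setup): if an integer branch is read into a buffer that is later interpreted as a 32-bit float, the bytes are reinterpreted rather than converted. The sketch below uses only the standard `struct` module to show the difference between the two; `njets = 3` is a made-up value.

```python
import struct

njets = 3  # made-up integer value of a discrete variable

# Reinterpret: the 4 bytes of the integer are read back as a float32,
# which is what happens when an int is written into a float buffer.
reinterpreted = struct.unpack("f", struct.pack("i", njets))[0]

# Convert: the integer is turned into the float with the same numeric
# value, as an explicit float(...) call does.
converted = struct.unpack("f", struct.pack("f", float(njets)))[0]

print(reinterpreted, converted)
```

The reinterpreted value is a tiny denormal number rather than 3.0, so a BDT cut on the number of jets would send the event down a different branch of the tree.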

Still no idea how to solve the error rate error though…

Glad that you solved your problem! Please mark your post as the solution to help others who might come after you.

(The error rate issue might warrant a separate topic)

Cheers,

Kim
