Hi,

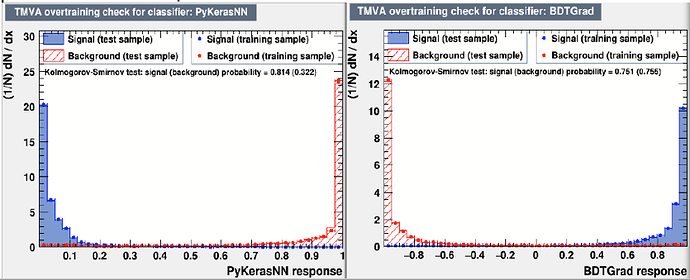

After a while away from TMVA I am back, looking at the new DNN MVA in ROOT 6.10.02. I have noticed what appears to me to be slightly odd behavior: in some of my trainings the target responses (1 or 0) for signal and background are inverted. By this I mean signal (background) is trained to give 0 (1), instead of the expected 1 (0).

In the end I think I have tracked this down to the fact that I use the following logic to fill my training and testing samples.

    for ( 'some loop over data entries' ) {
      if ( target )
      {
        if ( !useForTesting )
        {
          tmvaLoader->AddSignalTrainingEvent( InputDoubles, 1.0 );
        }
        else
        {
          tmvaLoader->AddSignalTestEvent( InputDoubles, 1.0 );
        }
      }
      else
      {
        if ( !useForTesting )
        {
          tmvaLoader->AddBackgroundTrainingEvent( InputDoubles, 1.0 );
        }
        else
        {
          tmvaLoader->AddBackgroundTestEvent( InputDoubles, 1.0 );
        }
      }
    }

where ‘target’ is a boolean that indicates whether the data entry is signal or background, and ‘useForTesting’ is another boolean that indicates whether the entry should be used for training or testing. InputDoubles is an array with all the input parameters for the given data entry.

tmvaLoader is an instance of TMVA::DataLoader.

The issue is that the order in which the above calls are first made is not always the same. It depends on the conditionals, and on whether the first data entry is declared to be signal or not. What I have found is that if the first entry is signal, so ‘AddSignalTrainingEvent’ is called first, then TMVA trains the network to give signal the expected response of 1, and background 0. However, if the first data entry is background, so ‘AddBackgroundTrainingEvent’ is called first, then the logic is for some reason inverted, and signal is trained to give a response of 0…

Note I have used the above logic many times in the past, with previous ROOT versions (using the MLP classifier). So this issue is new to the new ROOT version (6.10.02).

My use of TMVA::DataLoader is also new, so I am not clear whether the issue is related to this or to the use of the DNN classifier.

I have a workaround, which is just to make sure AddSignalTrainingEvent is called first (I skip entries until I get to the first training signal entry), and this seems to do the job. However, I am curious as to what people think about the above behavior. I doubt it is intentional, so it looks to me like a bug somewhere in TMVA, either in TMVA::DataLoader or perhaps specific to the DNN MVA?
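For illustration, here is a minimal sketch of a variant of that workaround. Rather than skipping entries, it buffers them and moves the first signal training entry to the front, so that nothing is dropped. The `Entry` struct and the helpers `putSignalTrainingFirst` and `fillLoader` are hypothetical names made up for this example, not part of TMVA. `fillLoader` is written as a template so that it works with any object providing the four `Add...Event` methods used above, which I assume includes a `TMVA::DataLoader` when `InputDoubles` is a `std::vector<double>`. This only controls the call order; it does not address whatever causes the inversion.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical buffer for one data entry (not a TMVA type).
struct Entry {
  std::vector<double> inputs;  // the input variables (InputDoubles above)
  bool target;                 // true = signal, false = background
  bool useForTesting;          // true = test sample, false = training sample
};

// Move the first signal training entry to the front of the buffer,
// preserving the relative order of all other entries.
// Returns false if no signal training entry exists.
inline bool putSignalTrainingFirst(std::vector<Entry>& entries) {
  auto it = std::find_if(entries.begin(), entries.end(), [](const Entry& e) {
    return e.target && !e.useForTesting;
  });
  if (it == entries.end()) return false;
  // Rotating [begin, it] by one brings *it to the front.
  std::rotate(entries.begin(), it, it + 1);
  return true;
}

// Same fill logic as in the loop above. Loader is assumed to provide the
// four Add...Event( const std::vector<double>&, double ) methods.
template <class Loader>
void fillLoader(Loader& loader, const std::vector<Entry>& entries) {
  for (const auto& e : entries) {
    if (e.target) {
      if (!e.useForTesting) loader.AddSignalTrainingEvent(e.inputs, 1.0);
      else                  loader.AddSignalTestEvent(e.inputs, 1.0);
    } else {
      if (!e.useForTesting) loader.AddBackgroundTrainingEvent(e.inputs, 1.0);
      else                  loader.AddBackgroundTestEvent(e.inputs, 1.0);
    }
  }
}
```

Usage would be to fill a `std::vector<Entry>` in the data loop, call `putSignalTrainingFirst(entries)`, and then `fillLoader(*tmvaLoader, entries)`. The cost is holding all entries in memory before handing them to the loader.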

cheers Chris