I’m back with another issue, this time about how RDFs optimise their processing and resource usage.

You might recall from this thread that I have a skimming code that uses RDFs to filter and clean up large n-tuples.

In this code, I simply loop over some trees in the file, use DefineSlot to add some columns, and then Filter() to apply some cuts. The main loop of the code is simply:

        for (unsigned int i = 0; i < skimmedTreenames.size(); i++) {
            TChain *tree = new TChain(TStringIt(skimmedTreenames[i]));
            int chained = tree->Add(TStringIt(inpaths[f]).Data());
            if (!tree->GetEntries()) { delete tree; continue; }

            log(LOG_INFO) << "Sklimming the following tree: " << skimmedTreenames[i] << '\n';

            // info is different in particleLevel tree
            bool isparticleLevel = false;
            if (skimmedTreenames[i] == "particleLevel") isparticleLevel = true;

            /*
              multi-threaded event loops. More details for a MT safe cluster submission here:
              https://root.cern/doc/master/classROOT_1_1RDataFrame.html#parallel-execution
              HTCondor CPUs request here: https://batchdocs.web.cern.ch/local/submit.html#resources-and-limits
            */
            // ROOT::EnableImplicitMT();

            ROOT::RDataFrame df(*tree);
            ROOT::RDF::RNode d = df;
            ROOT::RDF::RSnapshotOptions opts;
            opts.fMode = "UPDATE";

            // Add dummy variable slots
            d = AddDummySlots(d, isMC, isSyst);

            // Add the MC reconstructed objects (jets, leptons,...)
            d = AddObjectSlots(d, isMC);

            // Filter the dataframe to pre-selection
            d = d.Filter(std::get<1>(presel), std::get<2>(presel), std::get<0>(presel).c_str());

            // Add the variables that should be kept for any NTuple (Data, MC, Nominal & Syst)
            d = AddVariablesForAll(d);

            // Add nominal-only or syst-only variables
            d = isSyst ? AddVariablesForSyst(d, CaliMaps) : AddVariablesForNominal(d);

            // Add MC only or data only variables
            d = isMC ? AddVariablesForMC(d) : AddVariablesForData(d);

            /* Add MC Metadata ... */
            d = isMC ? AddMCMetadata(d, sw_totals) : d;

            // Defining progress bar
            d.Count().OnPartialResult(/*every */100/* events*/, [&log](auto c) { log(LOG_INFO) << c << " events processed\n"; });

            log(LOG_INFO) << "Snapshotting the file... " << '\n';
            CleanUpAndSave(d, skimmedTreenames[i], outpaths[f], opts);

            delete tree;
        }

        log(LOG_INFO) << " outfile -->>> : " << outpaths[f] << '\n';

        // End timer and print time elapsed
        log.time_since_last_snap();
        log(LOG_INFO) << '\n';
    } // closes the outer loop over input files (index f), whose opening is not shown here

    log(LOG_INFO) << "DONE :)" << '\n';

The only JIT-compiled code I have is the Snapshot() call inside the CleanUpAndSave() function.

Due to the large sizes of the N-tuples we are processing, I am trying to run this on the GRID, which is where we spotted issues with the resource usage of the code.

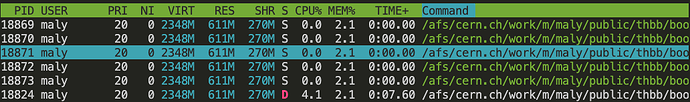

Although the code does not hoard much memory when executed locally, a large number of threads (5-15) appear to be running every time I run the executable. I am not sure why this happens, but since I request only 1 core on the GRID, this ends up really overwhelming the CPU (with CPU efficiency reaching 1800% for a 400 MB ROOT file).
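To narrow down where the extra threads appear, one option is to log the thread count of the process at a few points in the loop (for example before constructing the RDataFrame and after the Snapshot). Below is a minimal sketch of such a helper. It is not part of my skimming code: the name `CountProcessThreads` is hypothetical, and it assumes a Linux machine where `/proc/self/status` is readable.

```cpp
#include <fstream>
#include <string>

// Hypothetical helper (Linux-only assumption): returns the number of threads
// of the current process as reported in /proc/self/status, or -1 if the
// "Threads:" field cannot be found or the file cannot be opened.
int CountProcessThreads() {
    std::ifstream status("/proc/self/status");
    const std::string key = "Threads:";
    std::string line;
    while (std::getline(status, line)) {
        // The field looks like "Threads:<TAB>7"
        if (line.compare(0, key.size(), key) == 0) {
            // std::stoi skips the leading whitespace before the number
            return std::stoi(line.substr(key.size()));
        }
    }
    return -1;
}
```

Printing the returned value before and after each stage should show which step spawns the additional threads, if the count changes at all during the loop.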

I am trying to understand how I can avoid this, and whether there is something wrong in the way I set up this code that I could change to make it more stable. Do you have any suggestions?

This is with ROOT v6.28/00.