Dear experts,

Apologies in advance if I garble the ROOT jargon. I am running some Python code that makes plots from a few TB of ROOT files. Essentially, the code creates an RDataFrame from a chain (`TChain`) and then iterates over all the events in the RDataFrame to fill histograms, make plots, etc.

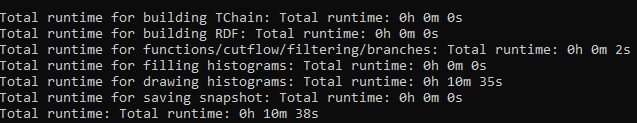

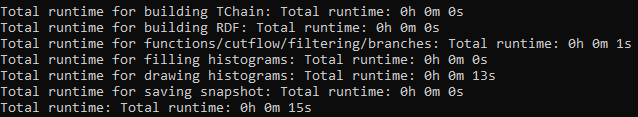

I’ve been testing multithreading in this framework (via `ROOT.ROOT.EnableImplicitMT(n)`) and have noticed peculiar, wildly inconsistent runtimes between runs, even between identical runs with the same `n`. I’m not sure whether this comes from how ROOT’s `EnableImplicitMT` works or from something server-side that is limiting performance.
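One way to rule a server-side limit in or out is to check how many CPUs the process is actually allowed to use, independently of ROOT. The sketch below assumes Linux; the helper name `cpu_budget` is hypothetical, and the cgroup path `/sys/fs/cgroup/cpu.max` only exists on cgroup v2 systems (on others the quota is reported as `None`).

```python
import os
from pathlib import Path


def cpu_budget(cpu_max_path="/sys/fs/cgroup/cpu.max"):
    """Return how many CPUs this process can actually use (Linux-only sketch)."""
    logical = os.cpu_count() or 1
    # CPUs the scheduler may run this process on (reflects taskset or batch-system pinning)
    if hasattr(os, "sched_getaffinity"):
        affinity = len(os.sched_getaffinity(0))
    else:
        affinity = logical
    # cgroup v2 quota: "200000 100000" means 2 CPUs' worth of time; "max 100000" means no limit
    quota_cpus = None
    try:
        quota, period = Path(cpu_max_path).read_text().split()[:2]
        if quota != "max":
            quota_cpus = int(quota) / int(period)
    except (OSError, ValueError):
        pass  # file missing (e.g. cgroup v1) or in an unexpected format
    return {
        "logical_cpus": logical,
        "affinity_cpus": affinity,
        "cgroup_quota_cpus": quota_cpus,
    }


print(cpu_budget())
```

If `affinity_cpus` or `cgroup_quota_cpus` is smaller than the `n` passed to `EnableImplicitMT`, extra threads would compete for the same cores, which could contribute to run-to-run variation.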

Is there a good way to output detailed log information while ROOT builds the chain, creates the RDataFrame, and iterates over the events, for example the runtime of each step, the number of threads in use, or other diagnostics? So far I have the following lines, but they may be outdated, and they don’t provide much information to go on:

    import ROOT

    # Sets RDataFrame's log channel to kInfo for as long as this object is alive
    verbosity = ROOT.Experimental.RLogScopedVerbosity(ROOT.Detail.RDF.RDFLogChannel(), ROOT.Experimental.ELogLevel.kInfo)

    ROOT.RDF.Experimental.AddProgressBar(self.rdf)
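Alongside those logs, a small timer can show both how long a step takes and how many cores it kept busy. This is a minimal sketch using only the standard library; `timed` is a hypothetical helper name. It relies on `time.process_time()` summing CPU time over all threads of the current process, so the ratio of CPU time to wall time roughly estimates the average number of busy cores. A ratio well below `n` would suggest the threads are often idle, for instance waiting on I/O.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label, log=print):
    """Log wall time, process CPU time and their ratio for the enclosed block."""
    stats = {}
    wall0, cpu0 = time.perf_counter(), time.process_time()
    try:
        yield stats  # filled in when the block exits
    finally:
        stats["wall_s"] = time.perf_counter() - wall0
        # CPU time summed over every thread in this process (ROOT's pool threads included)
        stats["cpu_s"] = time.process_time() - cpu0
        stats["cores_busy"] = stats["cpu_s"] / stats["wall_s"] if stats["wall_s"] > 0 else 0.0
        log(
            f"[{label}] wall={stats['wall_s']:.2f}s cpu={stats['cpu_s']:.2f}s "
            f"avg busy cores={stats['cores_busy']:.1f}"
        )
```

Because RDataFrame is lazy, the event loop only runs when the first result is accessed, so that access (for example the first `GetValue()` on a booked histogram) is the call worth wrapping in `with timed("event loop"):`.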

Is there anything else you would suggest? Basically, we want to find out exactly where ROOT spends its time in a given run and, if possible, what it actually does with the `n` passed to `EnableImplicitMT` (if I understand correctly, this parameter is more of a “hint” that ROOT uses to decide how to parallelize?).
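A sketch of how the effective thread count and the number of event loops could be printed, assuming PyROOT. `ROOT.ROOT.GetThreadPoolSize()` reports the size of the pool ROOT actually created, which can be compared with the requested `n`; `GetNSlots()` gives the number of processing slots the RDataFrame uses, and `GetNRuns()` counts how many full passes over the data have run so far (over a few TB, more than one pass would dominate the runtime). The helper names `summarize_rdf` and `main`, the tree name `Events`, the file pattern `data/*.root`, and the columns `x` and `y` are all hypothetical.

```python
import time


def summarize_rdf(rdf, pool_size):
    """Collect MT-related facts from an RDataFrame (or any object with the same methods)."""
    return {
        "thread_pool_size": pool_size,       # threads in ROOT's pool
        "nslots": rdf.GetNSlots(),           # processing slots this RDataFrame uses
        "event_loops_run": rdf.GetNRuns(),   # full passes over the dataset so far
    }


def main(n_threads=8):
    import ROOT  # PyROOT; imported here so summarize_rdf has no hard ROOT dependency

    ROOT.ROOT.EnableImplicitMT(n_threads)
    # Hypothetical tree name and file pattern, for illustration only
    chain = ROOT.TChain("Events")
    chain.Add("data/*.root")
    df = ROOT.RDataFrame(chain)

    # Book every result first: actions are lazy, so nothing has run yet
    hists = [df.Histo1D(col) for col in ("x", "y")]  # hypothetical columns

    start = time.perf_counter()
    hists[0].GetValue()  # triggers one event loop that fills all booked histograms
    print(f"event loop: {time.perf_counter() - start:.1f} s")
    print(summarize_rdf(df, ROOT.ROOT.GetThreadPoolSize()))
```

Calling `main(n)` for a few values of `n` would show whether the pool size matches the request and whether `event_loops_run` stays at 1; importing ROOT inside `main` is only a choice that keeps the summary helper usable on its own.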

Thanks for your help!

Philip Meltzer

ROOT Version: 6.32.02

Platform: Linux

Compiler: GCC