I’m reading hadron data into an RDataFrame. I left the office last week for the holidays and came back two days ago. Neither my code, the data, nor the ROOT version has changed, but I’m seeing unphysical results that my code did not produce before.

Our experiment measures the Lorentz vectors of photons scattered from a target. I’m combining pairs of photons to reconstruct the mother particle’s mass. As a minimal example:

using namespace ROOT;

void check_photon_data() {

RDataFrame df("t", "data/kaons.root");

auto calculate_photon_pair_mass = [](const ROOT::RVec<TVector3>& Ps, const ROOT::RVec<double> Es) -> ROOT::RVec<double> {

//Calculates the invariant mass of each photon pair in hit

//Later the hits with only etas can be counted.

ROOT::RVec<double> masses;

for (size_t i = 0; i < Es.size(); ++i) {

for (size_t j = i + 1; j < Es.size(); ++j) {

TLorentzVector p4_1, p4_2;

p4_1.SetVect(Ps[i]);

p4_1.SetE(Es[i]);

p4_2.SetVect(Ps[j]);

p4_2.SetE(Es[j]);

masses.push_back((p4_1 + p4_2).M());

}

}

return masses;

};

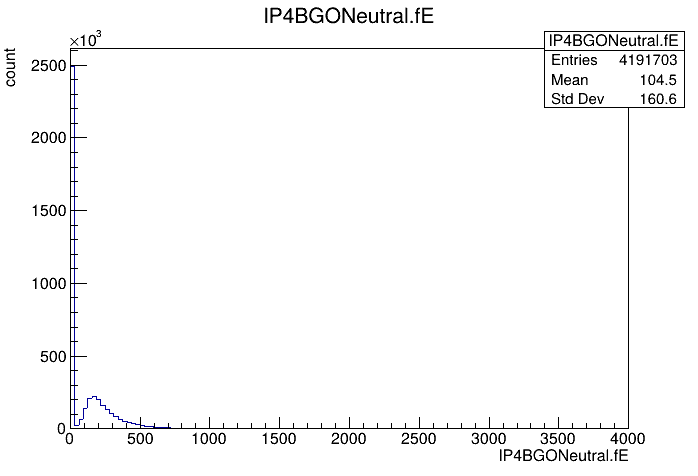

auto df_out = df.Define("mass", calculate_photon_pair_mass, {"lP4BGONeutral.fP", "lP4BGONeutral.fE"})

.Define("p2",

[](const RVec<TVector3>& Ps){

RVec<double> p2;

for (TVector3 p:Ps) {

p2.push_back(p*p);

}

return p2;

},

{"lP4BGONeutral.fP"})

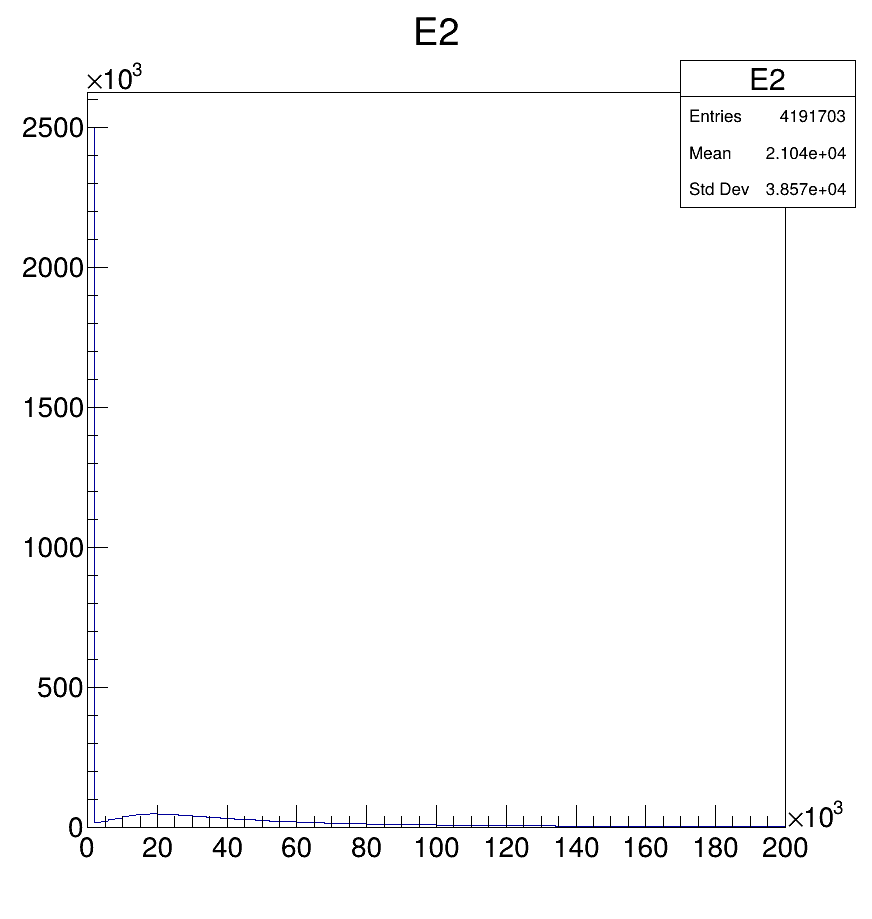

.Define("E2",

[](const RVec<double>& Es) {

RVec<double> E2;

for (double E:Es) {

E2.push_back(E*E);

}

return E2;

},

{"lP4BGONeutral.fE"})

.Define("nPhotonsP", [](const RVec<TVector3>& Ps){return Ps.size();}, {"lP4BGONeutral.fP"})

.Define("nPhotonsE", [](const RVec<double>& Es){return Es.size();}, {"lP4BGONeutral.fE"})

;

df_out.Display({"mass", "E2", "p2", "nPhotonsP", "nPhotonsE"}) -> Print();

}

The print statement shows the following:

+-----+-------------+---------------+---------------+-----------+-----------+

| Row | mass | E2 | p2 | nPhotonsP | nPhotonsE |

+-----+-------------+---------------+---------------+-----------+-----------+

| 0 | 0.000001 | 14082.835480 | 14082.835480 | 3 | 3 |

| | -150.167792 | 0.000000 | 0.000000 | | |

| | -143.386273 | 0.000000 | 20559.623230 | | |

+-----+-------------+---------------+---------------+-----------+-----------+

| 1 | 0.000000 | 570013.964222 | 570013.964222 | 3 | 3 |

| | -443.282662 | 0.000000 | 0.000000 | | |

| | -170.116999 | 0.000000 | 28939.793313 | | |

+-----+-------------+---------------+---------------+-----------+-----------+

| 2 | -0.000008 | 264146.546406 | 264146.546406 | 2 | 2 |

| | | 0.000000 | 0.000000 | | |

+-----+-------------+---------------+---------------+-----------+-----------+

| 3 | 0.000000 | 13026.406976 | 13026.406976 | 2 | 2 |

| | | 0.000000 | 0.000000 | | |

+-----+-------------+---------------+---------------+-----------+-----------+

| 4 | 0.000004 | 112994.245538 | 112994.245538 | 4 | 4 |

| | -389.224240 | 0.000000 | 0.000000 | | |

| | -198.731312 | 0.000000 | 55772.203213 | | |

| | -236.161392 | 0.000000 | 11281.660409 | | |

| | -106.215161 | | | | |

| | -206.536273 | | | | |

+-----+-------------+---------------+---------------+-----------+-----------+

The first photon is about what I would expect, but each following photon is completely unphysical.

I’m also seeing the following warning, which did not appear before:

Warning in <RTreeColumnReader::Get>: Branch lP4BGONeutral.fP hangs from a non-split branch. A copy is being performed in order to properly read the content.

The invariant mass should never return a negative number, regardless of the particle inputs.
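A side note on the sign: as far as I can tell, `TLorentzVector::M()` returns `-sqrt(-m2)` when the squared mass `m2 = E^2 - |p|^2` is negative, so a negative value points at bad inputs rather than at broken arithmetic. Below is a minimal standalone sketch of that sign convention in plain C++ without ROOT. The helper name `signed_mass` is hypothetical, and it takes the summed energy and the squared magnitude of the summed momentum as plain numbers.

```cpp
#include <cmath>

// Hypothetical helper mirroring the sign convention of TLorentzVector::M():
// for m2 = E^2 - |p|^2 it returns sqrt(m2) if m2 >= 0 and -sqrt(-m2) otherwise.
double signed_mass(double energy, double momentum_squared) {
    const double m2 = energy * energy - momentum_squared;
    return m2 < 0.0 ? -std::sqrt(-m2) : std::sqrt(m2);
}
```

For the pair made of the second and third photon in row 0, both energies are 0 and only the third photon has a non-zero momentum, so `signed_mass(0.0, 20559.623230)` gives roughly -143.386, which matches the third entry of the mass column. The negative masses therefore seem to follow directly from energies that are read as 0 while the momentum is not.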

I have tried:

- Reverting to an older code version

- Comparing the data byte for byte against a backup

- Reinstalling ROOT

- Checking myself into a psych ward to increase my medications

According to git, neither the data nor the code has changed. Reinstalling ROOT did actually help with some errors reading the trees when messing around in the console (something about non-matching templates; unfortunately I cannot find the actual error message in the log), but did not help with this problem. The conclusion from my tests would be that all of my data got corrupted in exactly the same way on all backups, which seems unlikely to me.

I also tried matching each event to our incoming photon beam. Each hit has at least one incoming photon, but wherever more than one photon is recorded, the second one always has an energy of 0 MeV:

+-----+-------------+---------------+---------------+--------------+

| Row | mass | E2 | p2 | lP4Tagger.fE |

+-----+-------------+---------------+---------------+--------------+

| 14 | 0.000003 | 41852.464343 | 41852.464343 | 1088.988867 |

| | | 0.000000 | 0.000000 | 0.000000 |

+-----+-------------+---------------+---------------+--------------+

| 24 | 0.000000 | 16769.912434 | 16769.912434 | 616.491890 |

| | -211.097166 | 0.000000 | 0.000000 | 0.000000 |

| | 81.903904 | 0.000000 | 20215.899362 | |

| | -142.182627 | 0.000000 | 3593.200360 | |

| | -59.943310 | | | |

| | -82.606515 | | | |

+-----+-------------+---------------+---------------+--------------+

| 39 | 0.000000 | 59959.183065 | 59959.183065 | 1447.773865 |

| | 123.132588 | 0.000000 | 0.000000 | 0.000000 |

| | -128.081809 | 0.000000 | 18806.866288 | |

| | -137.138128 | 0.000000 | 7728.967910 | |

| | -87.914549 | | | |

| | -184.485314 | | | |

+-----+-------------+---------------+---------------+--------------+

| 47 | -0.000004 | 70022.036036 | 70022.036036 | 2441.479398 |

| | | 0.000000 | 0.000000 | 0.000000 |

+-----+-------------+---------------+---------------+--------------+

From our beam we expect about every 50th to 100th event to contain more than 1 photon in the tagger. I believe that my code fails to properly read the event arrays, but I have no idea how to diagnose this.
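One cheap check that needs no ROOT at all is to compare E² and |p|² photon by photon, since for a correctly read photon the two should agree up to rounding. The following is only a sketch in plain C++: the function name `find_inconsistent_photons` and the relative tolerance are hypothetical choices, not part of any ROOT API.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical diagnostic helper: returns the indices of photons whose E^2 is
// exactly zero or whose E^2 and |p|^2 differ by more than rel_tol (relative).
// Only the elements present in both arrays are compared.
std::vector<std::size_t> find_inconsistent_photons(const std::vector<double>& e2,
                                                   const std::vector<double>& p2,
                                                   double rel_tol = 1e-6) {
    std::vector<std::size_t> bad;
    const std::size_t n = e2.size() < p2.size() ? e2.size() : p2.size();
    for (std::size_t i = 0; i < n; ++i) {
        const double scale = std::fmax(std::fabs(e2[i]), std::fabs(p2[i]));
        const bool zero_energy = (e2[i] == 0.0);
        const bool mismatch = std::fabs(e2[i] - p2[i]) > rel_tol * scale;
        if (zero_energy || mismatch) {
            bad.push_back(i);
        }
    }
    return bad;
}
```

Fed with the E2 and p2 values of row 0 from the first table, this flags the photons at index 1 and 2 and accepts only index 0. That pattern (first element fine, everything after it wrong) would be consistent with the arrays being read incorrectly rather than with a problem in the mass calculation itself.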

This is the relevant tree structure:

******************************************************************************

*Tree :t : t *

*Entries : 7853 : Total = 4376375 bytes File Size = 1826662 *

* : : Tree compression factor = 2.39 *

******************************************************************************

*Br 0 :fs_p : fs_p/D *

*Entries : 7853 : Total Size= 63449 bytes File Size = 59302 *

*Baskets : 2 : Basket Size= 32000 bytes Compression= 1.06 *

*............................................................................*

*Br 1 :fs_beta : fs_beta/D *

*Entries : 7853 : Total Size= 63467 bytes File Size = 58612 *

*Baskets : 2 : Basket Size= 32000 bytes Compression= 1.07 *

*............................................................................*

*Br 2 :lP4Tagger : Int_t lP4Tagger_ *

*Entries : 7853 : Total Size= 66528 bytes File Size = 12080 *

*Baskets : 3 : Basket Size= 32000 bytes Compression= 5.22 *

*............................................................................*

*Br 3 :lP4Tagger.fUniqueID : UInt_t fUniqueID[lP4Tagger_] *

*Entries : 7853 : Total Size= 66085 bytes File Size = 10289 *

*Baskets : 4 : Basket Size= 32000 bytes Compression= 6.36 *

*............................................................................*

*Br 4 :lP4Tagger.fBits : UInt_t fBits[lP4Tagger_] *

*Entries : 7853 : Total Size= 66053 bytes File Size = 13920 *

*Baskets : 4 : Basket Size= 32000 bytes Compression= 4.70 *

*............................................................................*

*Br 5 :lP4Tagger.fP : TVector3 fP[lP4Tagger_] *

*Entries : 7853 : Total Size= 369985 bytes File Size = 61959 *

*Baskets : 13 : Basket Size= 32000 bytes Compression= 5.96 *

*............................................................................*

*Br 6 :lP4Tagger.fE : Double_t fE[lP4Tagger_] *

*Entries : 7853 : Total Size= 99793 bytes File Size = 38813 *

*Baskets : 5 : Basket Size= 32000 bytes Compression= 2.56 *

*............................................................................*

*Br 7 :lP4BGONeutral : Int_t lP4BGONeutral_ *

*Entries : 7853 : Total Size= 66928 bytes File Size = 15620 *

*Baskets : 3 : Basket Size= 32000 bytes Compression= 4.04 *

*............................................................................*

*Br 8 :lP4BGONeutral.fUniqueID : UInt_t fUniqueID[lP4BGONeutral_] *

*Entries : 7853 : Total Size= 112560 bytes File Size = 13859 *

*Baskets : 5 : Basket Size= 32000 bytes Compression= 8.07 *

*............................................................................*

*Br 9 :lP4BGONeutral.fBits : UInt_t fBits[lP4BGONeutral_] *

*Entries : 7853 : Total Size= 112524 bytes File Size = 19713 *

*Baskets : 5 : Basket Size= 32000 bytes Compression= 5.68 *

*............................................................................*

*Br 10 :lP4BGONeutral.fP : TVector3 fP[lP4BGONeutral_] *

*Entries : 7853 : Total Size= 835081 bytes File Size = 515802 *

*Baskets : 28 : Basket Size= 32000 bytes Compression= 1.62 *

*............................................................................*

*Br 11 :lP4BGONeutral.fE : Double_t fE[lP4BGONeutral_] *

*Entries : 7853 : Total Size= 192693 bytes File Size = 167687 *

*Baskets : 7 : Basket Size= 32000 bytes Compression= 1.15 *

*............................................................................*

*Br 12 :lP4BGOProton : Int_t lP4BGOProton_ *

*Entries : 7853 : Total Size= 66523 bytes File Size = 15420 *

*Baskets : 3 : Basket Size= 32000 bytes Compression= 4.09 *

*............................................................................*

*Br 13 :lP4BGOProton.fUniqueID : UInt_t fUniqueID[lP4BGOProton_] *

*Entries : 7853 : Total Size= 46269 bytes File Size = 6438 *

*Baskets : 3 : Basket Size= 32000 bytes Compression= 7.09 *

*............................................................................*

*Br 14 :lP4BGOProton.fBits : UInt_t fBits[lP4BGOProton_] *

*Entries : 7853 : Total Size= 46241 bytes File Size = 7597 *

*Baskets : 3 : Basket Size= 32000 bytes Compression= 6.00 *

*............................................................................*

*Br 15 :lP4BGOProton.fP : TVector3 fP[lP4BGOProton_] *

*Entries : 7853 : Total Size= 171920 bytes File Size = 96094 *

*Baskets : 7 : Basket Size= 32000 bytes Compression= 1.78 *

*............................................................................*

*Br 16 :lP4BGOProton.fE : Double_t fE[lP4BGOProton_] *

*Entries : 7853 : Total Size= 60148 bytes File Size = 33948 *

*Baskets : 3 : Basket Size= 32000 bytes Compression= 1.75 *

*............................................................................*

*Br 17 :lP4BGOPion : Int_t lP4BGOPion_ *

*Entries : 7853 : Total Size= 66473 bytes File Size = 15434 *

*Baskets : 3 : Basket Size= 32000 bytes Compression= 4.09 *

*............................................................................*

*Br 18 :lP4BGOPion.fUniqueID : UInt_t fUniqueID[lP4BGOPion_] *

*Entries : 7853 : Total Size= 46251 bytes File Size = 6406 *

*Baskets : 3 : Basket Size= 32000 bytes Compression= 7.12 *

*............................................................................*

*Br 19 :lP4BGOPion.fBits : UInt_t fBits[lP4BGOPion_] *

*Entries : 7853 : Total Size= 46223 bytes File Size = 7564 *

*Baskets : 3 : Basket Size= 32000 bytes Compression= 6.03 *

*............................................................................*

*Br 20 :lP4BGOPion.fP : TVector3 fP[lP4BGOPion_] *

*Entries : 7853 : Total Size= 171894 bytes File Size = 96129 *

*Baskets : 7 : Basket Size= 32000 bytes Compression= 1.78 *

*............................................................................*

*Br 21 :lP4BGOPion.fE : Double_t fE[lP4BGOPion_] *

*Entries : 7853 : Total Size= 60130 bytes File Size = 34336 *

*Baskets : 3 : Basket Size= 32000 bytes Compression= 1.73 *

*............................................................................*

Edit: The problem persists with new data


ROOT Version: ROOT 6.36.000

Platform: Arch Linux

Compiler: GCC