I’ve noticed some odd behavior using TH1F or TH1D (I suspect I must be doing something wrong, but I don’t know what).

Here is the issue:

I have some raw ADC data, 4096 channels.

I make a TH1F with 4096 bins from 0-4096 and fill it.

I get the expected histogram.

I scale my raw data (event by event) by 0.19845.

I make a new ROOT histogram with 4096 bins spanning 0 to 4096*0.19845 and fill it with the scaled values.

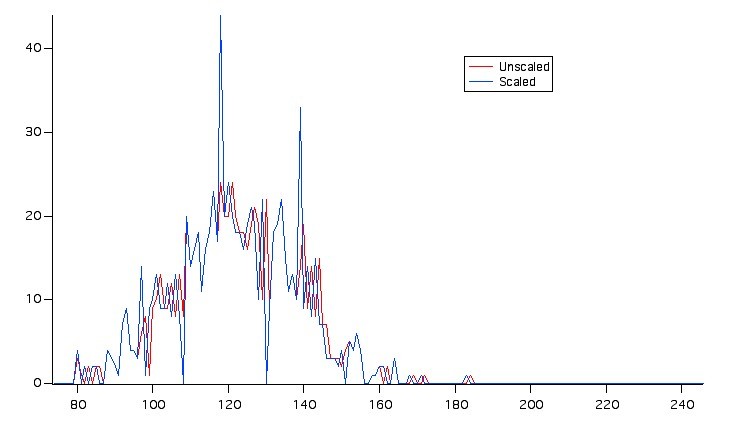

I don’t get the same histogram… there seem to be odd aliasing effects going on.

Weird.

The following shows what I am doing more succinctly (though I haven’t run precisely this):

TH1F *unscaled = new TH1F("unscaled", "hist1", 4096, 0, 4096);

TH1F *scaled = new TH1F("scaled", "hist2", 4096, 0, 4096*0.19845);

Then, in the event loop, there are lines like:

unscaled->Fill(value);

scaled->Fill(value*0.19845);

I’d think that unscaled and scaled would then have the same shape. But they don’t: for some scaling factors the scaled histogram is shifted, and for others some bins appear to be combined or split. I have attached an example to clarify this…
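One way to probe this without ROOT is a small standalone check, sketched below. It assumes that a fixed-width bin lookup is computed as `nbins * (x - xmin) / (xmax - xmin)` truncated to an integer; `binIndex` and `countShifted` are hypothetical helpers written for this illustration and are not ROOT functions. With these ranges every scaled value sits exactly on a bin edge, so floating-point rounding could plausibly decide which side it lands on.

```cpp
#include <cassert>

// Hypothetical stand-in for a fixed-width bin lookup (0-based index).
// This is an assumption about how such a lookup is typically computed,
// not a copy of ROOT's implementation.
int binIndex(double x, int nbins, double xmin, double xmax) {
    assert(nbins > 0 && xmax > xmin);
    return static_cast<int>(nbins * (x - xmin) / (xmax - xmin));
}

// Count the ADC channels whose scaled value lands in a different bin
// than the unscaled value does, for the two histogram ranges above.
int countShifted(double scale, int nchan = 4096) {
    int shifted = 0;
    for (int value = 0; value < nchan; ++value) {
        int plain  = binIndex(value, nchan, 0.0, nchan);
        int scaled = binIndex(value * scale, nchan, 0.0, nchan * scale);
        if (plain != scaled) {
            ++shifted;
        }
    }
    return shifted;
}
```

Calling `countShifted(0.19845)` from a small `main` and printing the result gives the number of channels that moved. Under the assumption above, a nonzero count would suggest rounding at the bin edges rather than a mistake in the filling loop.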

Thanks for any help you can give,

-pieter