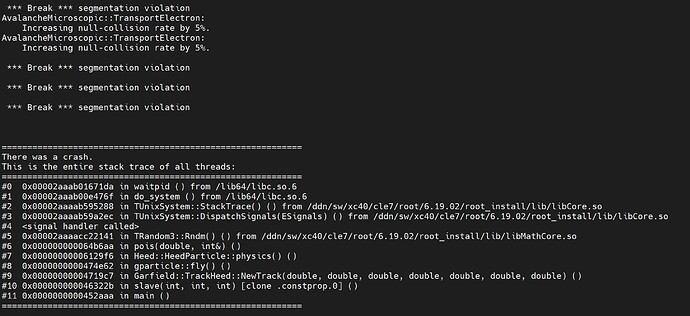

Hi, I was writing a program to simulate avalanches from multiple particle tracks by running each track in parallel on its own process. When I do so, I get a segmentation fault whenever I try to initialize a new track. I know an alternative is to call track->GetCluster() and GetElectron(), store the outputs, and pass them to a parallel version of the avalanche, but I was wondering if there is any way to parallelize the whole thing. The errors are attached.

Hi,

it’s definitely possible, but it would need a bit of rewriting in TrackHeed. At the moment, calling GetCluster triggers the simulation of atomic relaxation and delta electron transport for the next collision along the track. To parallelise things, this would need to be done for all clusters on a track within NewTrack.

So what you are saying is that, instead of calculating one cluster each time GetCluster is called, I must make it calculate all clusters on that track at once?

Right now I am just trying to run the track portion (GetCluster and GetElectron) on one process with MPI, and then use a parallel version of Garfield to simulate the electron avalanche.
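For anyone following along, here is a rough sketch of the hand-off described above: the electrons read out on the one track process are copied into plain records and divided into chunks, one per avalanche worker. `SeedElectron` and `splitSeeds` are hypothetical names made up for illustration and are not part of Garfield++ or Heed. The MPI send/receive and the avalanche call itself are left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical plain-data record for one ionisation electron. The fields are
// an assumption about what one would store after reading an electron out.
struct SeedElectron {
  double x, y, z, t;  // position and time
  double e;           // energy
  double dx, dy, dz;  // initial direction
};

// Split the seeds into nWorkers contiguous chunks whose sizes differ by at
// most one, so each avalanche worker gets a similar number of electrons.
std::vector<std::vector<SeedElectron>> splitSeeds(
    const std::vector<SeedElectron>& seeds, std::size_t nWorkers) {
  assert(nWorkers > 0);
  std::vector<std::vector<SeedElectron>> chunks(nWorkers);
  const std::size_t base = seeds.size() / nWorkers;
  const std::size_t extra = seeds.size() % nWorkers;
  std::size_t start = 0;
  for (std::size_t w = 0; w < nWorkers; ++w) {
    // The first `extra` workers take one additional electron each.
    const std::size_t len = base + (w < extra ? 1 : 0);
    chunks[w].assign(seeds.begin() + start, seeds.begin() + start + len);
    start += len;
  }
  return chunks;
}
```

Each chunk could then be sent to one worker, which starts an avalanche from every electron in it. Since the number of electrons per track varies, handing out work dynamically may balance the load better than a fixed split like this one.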

OK, so I got NewTrack() to work with MPI when running on only one process. My previous error occurred because I had to rebuild the Heed library so that it uses MPI’s random number engine and not ROOT’s.

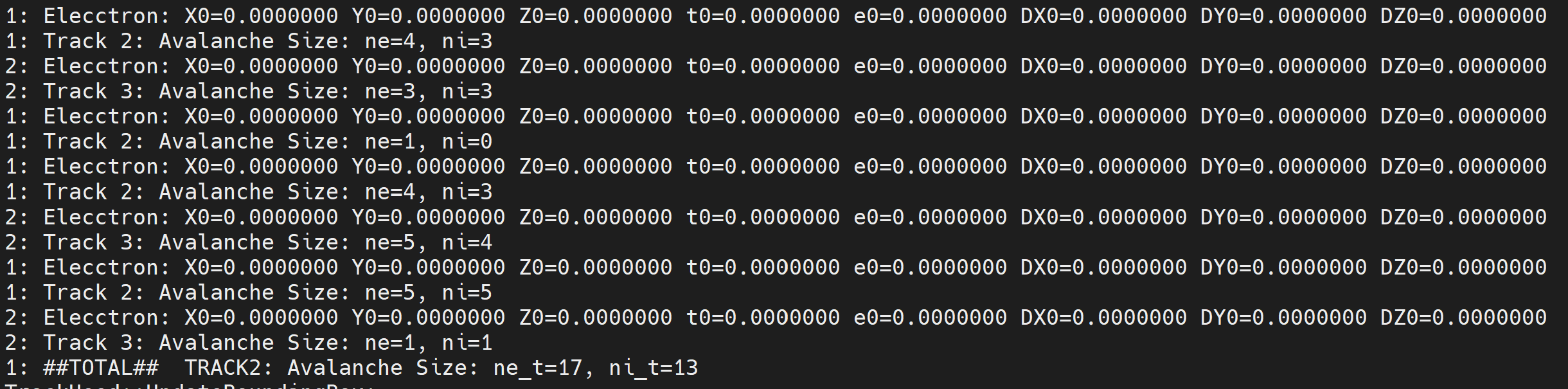

I was wondering if you had an explanation for why, the first few times NewTrack() is called after initializing everything, all the electrons returned by GetElectron() have 0 for all of their variables. I was simulating 1000 tracks on 4 processes, and for about the first 10 tracks the electrons were filled with only 0s.

My program has each process compute the information I need for a single track, after which it is sent another track to compute. Here is my code if you would like to view it; look at the slave() and work_done() functions.

gem2B.C (19.6 KB)
