Hi,

I am running into this problem again, this time in code that looks like:

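```python
import ROOT

import warnings

# Ignore the FutureWarning about function-template instantiation
warnings.filterwarnings(action='ignore', category=FutureWarning, message='Instantiating a function template.*')

#--------------------

def test(replica):
    # e.g. expr = "v_trg_wgt_sta[3]", repl = "v_trg_wgt_sta_3_"
    expr = "v_trg_wgt_sta[{}]".format(replica)
    repl = expr.replace("[", "_").replace("]", "_")

    # A new Define is booked on every call; AsNumpy then runs the event loop
    dff = df.Define(repl, expr)
    d_var = dff.AsNumpy([repl])

#--------------------

df = ROOT.RDataFrame("tree", "file.root")

for i in range(0, 10):
    test(i)
```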

This problem has been getting my jobs killed for days. As you mentioned, we could instead book all the `Define`s once, up front:

```python
import ROOT

import warnings

# Ignore the FutureWarning about function-template instantiation
warnings.filterwarnings(action='ignore', category=FutureWarning, message='Instantiating a function template.*')

#--------------------

def test(replica):
    expr = "v_trg_wgt_sta[{}]".format(replica)
    repl = expr.replace("[", "_").replace("]", "_")

    # The column was already booked by define(); only read it here
    d_var = df.AsNumpy([repl])

#--------------------

def define(replica):
    # Chain a new Define onto the global dataframe node
    global df

    expr = "v_trg_wgt_sta[{}]".format(replica)
    repl = expr.replace("[", "_").replace("]", "_")

    df = df.Define(repl, expr)

#--------------------

df = ROOT.RDataFrame("tree", "file.root")

# Book all the columns once, before any of them is read
for i in range(0, 600):
    define(i)

for i in range(0, 10):
    test(i)
```

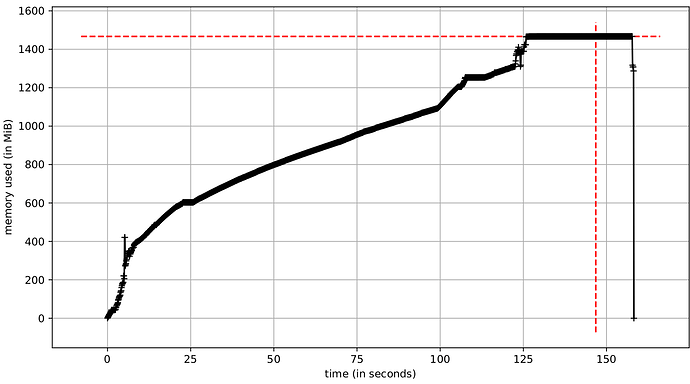

That way, whatever needs to be allocated by `RDataFrame::Define` gets allocated only once. This seems to lower the memory usage to 1.5 GB.
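To compare figures like the 1.5 GB above between the two approaches, the peak resident memory of the Python process can be read from the standard `resource` module (Unix only). A minimal sketch; the helper name `peak_rss_mb` is hypothetical and not part of ROOT:

```python
import resource
import sys


def peak_rss_mb():
    """Return the peak resident set size of the current process in MB."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    # ru_maxrss is reported in kilobytes on Linux but in bytes on macOS.
    if sys.platform == "darwin":
        return peak / (1024.0 * 1024.0)
    return peak / 1024.0
```

Printing `peak_rss_mb()` after each `test(i)` would show how much each call adds. Since `ru_maxrss` is a high-water mark it never decreases, so it cannot show memory being released; on Linux the current value is available as `VmRSS` in `/proc/self/status`.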

However:

- The dataframe is needed by many functions, not just `test`. These functions might need to read a different part of the file or do different things with the data, so I would strongly prefer to have one instance of it rather than creating it multiple times.

- I would need a booking function every time I use RDataFrame (i.e. the second approach), which is less simple than the first one.

- Everything that gets booked occupies memory for the whole lifetime of the process, even if it is used only once.

- 1.5 GB is a lot of memory to hold on to until the end of the process. We might need to process several files, which might need several dataframes; that adds up to a lot of wasted memory.

- Python's garbage collector does not release that memory.
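One generic pattern for memory that is only returned at process exit, not specific to ROOT, is to run each file's processing in a short-lived child process, so that everything it allocated goes back to the operating system when it exits. A minimal sketch for Python 3, assuming Linux's `fork` start method; `process_file` and `run_isolated` are hypothetical names, and the per-file work is a placeholder that does not touch ROOT:

```python
import multiprocessing


def process_file(path):
    """Hypothetical per-file job. In a real script this would create the
    RDataFrame for `path`, book the Defines and call AsNumpy; here it
    returns a placeholder value so the sketch runs without ROOT."""
    return len(path)


def _child(func, arg, conn):
    # Runs in the child process: send the result back to the parent.
    try:
        conn.send(func(arg))
    finally:
        conn.close()


def run_isolated(func, arg):
    """Run func(arg) in a short-lived child process and return its result.

    Whatever the child allocates is returned to the operating system when
    the child exits, independently of the parent's garbage collector.
    """
    # "fork" assumes Linux, as on the x86_64-centos7 platform below.
    ctx = multiprocessing.get_context("fork")
    recv_conn, send_conn = ctx.Pipe(duplex=False)
    proc = ctx.Process(target=_child, args=(func, arg, send_conn))
    proc.start()
    send_conn.close()  # the parent only reads from the pipe
    try:
        # Raises EOFError if the child died before sending a result.
        return recv_conn.recv()
    finally:
        recv_conn.close()
        proc.join()


if __name__ == "__main__":
    for path in ["file1.root", "file2.root"]:
        print(path, run_isolated(process_file, path))
```

The result is sent back through a pipe, so it has to be picklable (NumPy arrays from `AsNumpy` are). Reading the result before `join()` avoids blocking on large payloads.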

My use case is pretty standard; as the scripts show, I am not doing anything exotic.

Question: Is there a way to work around this, or should I go back to using TFile and TTree?

The type of answer I expect is:

“You can deallocate the object with df.Free(repl) after every call.”

But I assume we cannot do such a thing. Feel free to modify the scripts with a realistic, workable approach.
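One possible direction, sketched here only under assumptions: keep a single base dataframe and let a small helper define each replica column on demand, at most once, so there is no separate booking pass. `ColumnCache` and `node_for` are hypothetical names, not ROOT API; the only dataframe method they rely on is `Define(name, expression)`. This does not release any memory ROOT has already allocated:

```python
class ColumnCache:
    """Keep one base dataframe and define each derived column at most once.

    `base` is any object with a Define(name, expression) method, e.g. a
    ROOT.RDataFrame. The node returned by Define is memoised per column
    name, so asking for the same column again reuses that node instead of
    booking a new Define.
    """

    def __init__(self, base):
        self._base = base
        self._nodes = {}

    @staticmethod
    def column_name(branch, replica):
        # Same naming scheme as the scripts: "v[3]" -> "v_3_".
        expr = "{}[{}]".format(branch, replica)
        return expr, expr.replace("[", "_").replace("]", "_")

    def node_for(self, branch, replica):
        expr, name = self.column_name(branch, replica)
        if name not in self._nodes:
            self._nodes[name] = self._base.Define(name, expr)
        return self._nodes[name], name
```

With `cache = ColumnCache(ROOT.RDataFrame("tree", "file.root"))`, `test` would call `node, name = cache.node_for("v_trg_wgt_sta", replica)` and then `node.AsNumpy([name])`. This only saves work when the same column is requested more than once; each distinct column still costs one `Define`.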

Cheers.


ROOT Version: 6.20

Platform: x86_64-centos7

Compiler: gcc9-dbg