Hello,

I wanted to try out the new distributed processing with Dask on Swan, as described in https://swan.docs.cern.ch/condor/configure_session/ (apparently I'm not allowed to put it as a link), on my admittedly somewhat unusual case of 40 MHz scouting.

My input files have O(100M) events each, and while the standard RDataFrame seems to be capable of processing them, the distributed setup crashes with out-of-memory errors, even if I try with a single file.

So, e.g., for a regular RDataFrame on a single file of about 315M entries:

import ROOT

ROOT.EnableImplicitMT()

d1 = ROOT.RDataFrame("Events", 'root://eoscms.cern.ch/eos/cms/store/cmst3/group/l1tr/gpetrucc/l1scout/2024/zerobias_run382250/v0/l1nano_job1.root')

# The file is accessed via xrootd on eoscms.cern.ch, but the security features of this form don't allow me to write the path that way, because new users aren't allowed to post links.

I can do

d1.Filter("Sum(L1Mu_pt>18) >= 1").Count().GetValue()

and get the answer, 62339, in about 1 minute, which is not bad considering Swan has only 4 cores, so it's running at about 1 MHz/core.
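For reference, the per-core rate quoted above is just back-of-the-envelope arithmetic. A minimal sketch, assuming the rounded figures from this post (about 315M entries, about 60 s wall time, 4 cores) rather than a precise measurement:

```python
# Rough throughput estimate for the single-file, non-distributed test.
# All inputs are the approximate figures quoted in the post, not measurements.
n_entries = 315e6    # approximate number of entries in the file
wall_time_s = 60.0   # "about 1 minute"
n_cores = 4          # cores available in the Swan session

total_rate_mhz = n_entries / wall_time_s / 1e6   # events per second, in MHz
per_core_rate_mhz = total_rate_mhz / n_cores     # same, divided by core count

print(f"total: {total_rate_mhz:.2f} MHz, per core: {per_core_rate_mhz:.2f} MHz")
```

With these inputs the per-core figure comes out a bit above 1 MHz, consistent with the order of magnitude stated above.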

However, if I try to open a Dask RDataFrame

DRDataFrame = ROOT.RDF.Experimental.Distributed.Dask.RDataFrame

dd1 = DRDataFrame("Events", '/eos/cms/store/cmst3/group/l1tr/gpetrucc/l1scout/2024/zerobias_run382250/v0/l1nano_job1.root')

dd1.Filter("Sum(L1Mu_pt>18) >= 1").Count().GetValue()

then Swan starts reporting memory errors of the form

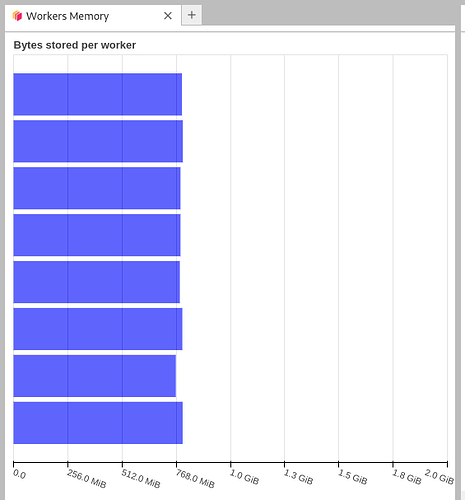

2024-06-27 14:00:16,433 - distributed.nanny.memory - WARNING - Worker tcp://127.0.0.1:36501 (pid=11453) exceeded 95% memory budget. Restarting...

2024-06-27 14:00:16,624 - distributed.nanny.memory - WARNING - Worker tcp://127.0.0.1:44505 (pid=11521) exceeded 95% memory budget. Restarting...

2024-06-27 14:00:16,634 - distributed.nanny.memory - WARNING - Worker tcp://127.0.0.1:38437 (pid=11474) exceeded 95% memory budget. Restarting...

2024-06-27 14:00:16,726 - distributed.nanny.memory - WARNING - Worker tcp://127.0.0.1:34547 (pid=11497) exceeded 95% memory budget. Restarting...
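To summarise the warnings above, here is a small sketch that parses them and counts how many distinct workers were restarted. The regular expression is my own and is only assumed to match the nanny message format exactly as it appears in these four lines:

```python
import re

# The four warning lines copied from the log above.
log_lines = [
    "2024-06-27 14:00:16,433 - distributed.nanny.memory - WARNING - Worker tcp://127.0.0.1:36501 (pid=11453) exceeded 95% memory budget. Restarting...",
    "2024-06-27 14:00:16,624 - distributed.nanny.memory - WARNING - Worker tcp://127.0.0.1:44505 (pid=11521) exceeded 95% memory budget. Restarting...",
    "2024-06-27 14:00:16,634 - distributed.nanny.memory - WARNING - Worker tcp://127.0.0.1:38437 (pid=11474) exceeded 95% memory budget. Restarting...",
    "2024-06-27 14:00:16,726 - distributed.nanny.memory - WARNING - Worker tcp://127.0.0.1:34547 (pid=11497) exceeded 95% memory budget. Restarting...",
]

# Hypothetical pattern, written only against the lines shown here.
pattern = re.compile(r"Worker (tcp://\S+) \(pid=(\d+)\) exceeded (\d+)% memory budget")

# Map each worker address to the list of PIDs that were restarted for it.
restarts = {}
for line in log_lines:
    match = pattern.search(line)
    if match:
        address, pid = match.group(1), int(match.group(2))
        restarts.setdefault(address, []).append(pid)

print(f"{len(restarts)} distinct workers restarted")
for address, pids in restarts.items():
    print(address, pids)
```

All addresses are on 127.0.0.1, so these are local worker processes in the Swan session, and all of them hit the memory limit within about 0.3 s of each other.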

Any idea?

Thanks,

Giovanni