Dear All,

I want to use TMVA to do cross-validation with the DNN method.

The code I used is attached as a file.

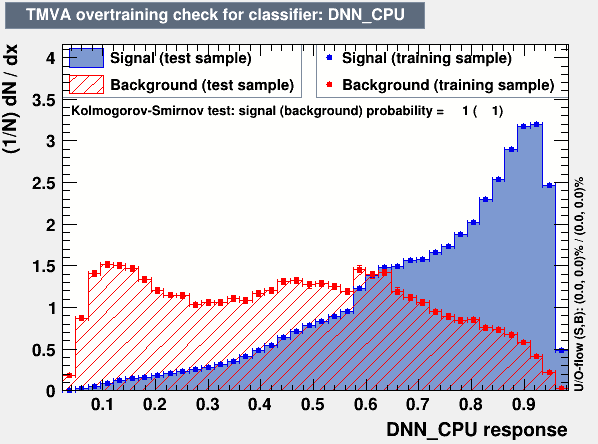

The code runs, but strangely, the Kolmogorov-Smirnov test gives a p-value of 1.

As I understand it, a Kolmogorov-Smirnov p-value of 1 indicates that something is wrong, namely that the same data was used for both training and testing.

Below is the part of the code I suspect.

I wanted to use 4 folds in rotation for training and testing, and to do the final validation on a separate 5th fold.

So I set numFolds to 4.

Then I divided the total number of events by 5 (numFolds + 1) and used that value as the number of test events (nTest_Signal and nTest_Background).

Why does the Kolmogorov-Smirnov test p-value come out as 1?

How can I solve it?

Any help would be greatly appreciated.

Best Regards,

Younghoon.

    UInt_t numFolds = 4;
    UInt_t nSignalEvents = 432851;
    UInt_t nBackgroundEvents = 269441;

    // Reserve 1/(numFolds+1) of the signal and background events as the test sample
    UInt_t partition_nSignalEvents = UInt_t(Double_t(nSignalEvents) / Double_t(numFolds+1));
    UInt_t partition_nBackgroundEvents = UInt_t(Double_t(nBackgroundEvents) / Double_t(numFolds+1));

    TString TS_partition_nSignalEvents = TString::Format("%u", partition_nSignalEvents);
    TString TS_partition_nBackgroundEvents = TString::Format("%u", partition_nBackgroundEvents);

    TString nTest_Signal = "nTest_Signal=" + TS_partition_nSignalEvents;
    TString nTest_Background = "nTest_Background=" + TS_partition_nBackgroundEvents;

    TString PrepareTrainingAndTestTree_Option = nTest_Signal + ":" + nTest_Background + ":SplitMode=Random" + ":NormMode=NumEvents" + ":!V";

    dataloader->PrepareTrainingAndTestTree(mycuts, mycutb, PrepareTrainingAndTestTree_Option);

    // Cross-validation settings
    TString analysisType = "Classification";
    TString splitType = (useRandomSplitting) ? "Random" : "Deterministic";
    // Deterministic split: fold = |eventID| % NumFolds (needs an eventID spectator in the dataloader)
    TString splitExpr = (!useRandomSplitting) ? "int(fabs([eventID]))%int([NumFolds])" : "";

    // numFolds is unsigned, so it is formatted with %u
    TString cvOptions = Form("!V"
                             ":!Silent"
                             ":ModelPersistence"
                             ":AnalysisType=%s"
                             ":SplitType=%s"
                             ":NumFolds=%u"
                             ":SplitExpr=%s",
                             analysisType.Data(), splitType.Data(), numFolds,
                             splitExpr.Data());

    TMVA::CrossValidation cv{"TMVACrossValidation", dataloader, outputFile, cvOptions};

    if (Use["DNN_CPU"] or Use["DNN_GPU"]) {
        // Network layout: five 256-node layers followed by a LINEAR layer
        TString layoutString ("Layout=RELU|256,RELU|256,TANH|256,RELU|256,RELU|256,LINEAR");

        TString trainingStrategyString = ("TrainingStrategy=LearningRate=1e-3,Momentum=0.9,"
                                          "ConvergenceSteps=20,BatchSize=512,TestRepetitions=1,"
                                          "WeightDecay=1e-4,Regularization=L2,"
                                          "DropConfig=0.0+0.25+0.25+0.25+0.25+0.25");

        TString dnnOptions ("!H:V:ErrorStrategy=CROSSENTROPY:VarTransform=Norm:"
                            "WeightInitialization=XAVIERUNIFORM");
        dnnOptions.Append (":"); dnnOptions.Append (layoutString);
        dnnOptions.Append (":"); dnnOptions.Append (trainingStrategyString);

        if (Use["DNN_GPU"]) {
            TString gpuOptions = dnnOptions + ":Architecture=GPU";
            cv.BookMethod(TMVA::Types::kDL, "DNN_GPU", gpuOptions);
        }

        if (Use["DNN_CPU"]) {
            TString cpuOptions = dnnOptions + ":Architecture=CPU";
            cv.BookMethod(TMVA::Types::kDL, "DNN_CPU", cpuOptions);
        }
    }

    cv.Evaluate();