Hi Rooters,

I have a question about neural networks in ROOT.

I trained my network on an MC sample (signal and background), following the ROOT example mlpHiggs.C in the tutorials directory.

My network looks like this:

TMultiLayerPerceptron *mlp = new TMultiLayerPerceptron("@distance,@invmass:30:20:type", simu, "Entry$%2", "(Entry$+1)%2");

mlp->SetLearningMethod(TMultiLayerPerceptron::kFletcherReeves);

mlp->Train(20, "text,graph,update=10");

mlp->Export("test_all", "C++");

mlp->SetLearningMethod(TMultiLayerPerceptron::kBFGS);

mlp->Train(40, "text,graph,update=10,+");

mlp->Export("test_all", "C++");

So I have only two input variables. I load a sample of events from the simu tree and train the network on it.

Then I export the trained network to C++ code.

I am very satisfied with the results, because the network gives a very nice separation between the signal and my hardest background (which was not possible in the normal analysis).

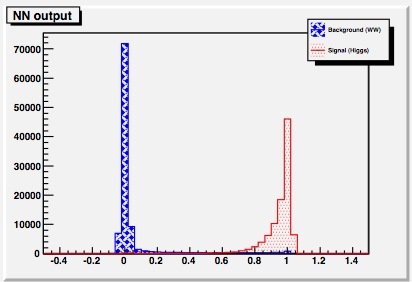

The attached file LEARN.jpg shows the clean separation.

So far, so good.

But the problem appears when I use the exported, trained network in my main analysis program, on my bigger MC background stream sample:

Loop()

...

test_all instance_all;

Double_t NN_out_total = instance_all.Value(0, invmass_mu, Distance_function);

NN_out_before->Fill(NN_out_total);

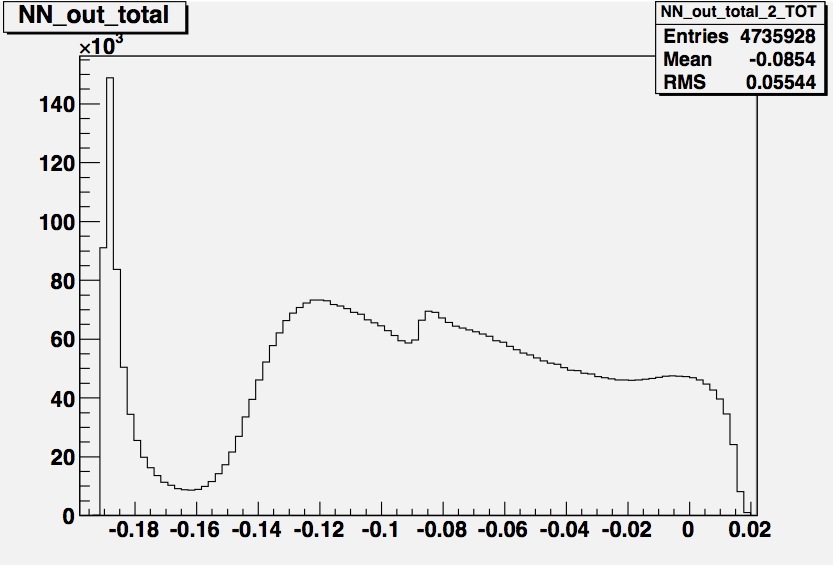

In the attached file RUN.jpg I don't see a clear peak for the background sample.

(This is the same background sample that I used to train the network, but with 40 times the statistics.) So the network should know the characteristics of these events.

This is the first time I have used a neural network, so I realize I may be making some obvious mistake.

Thank you very much for any suggestion.

P.S. I saw an earlier discussion of a similar problem on the forum:

root.cern.ch/phpBB2/viewtopic.ph … al+network

but that post is from 2007, and I use ROOT version 5.24, which is quite new.