Hi,

My problem is very simple: when I add a tree to the dataloader, the weight parameter does nothing.

In other words this:

TTree * tree = (TTree*)file->Get("tree");

dataloader->AddSignalTree( tree );

dataloader->AddBackgroundTree( tree );

TCut mycuts = "some_signal_cuts" ;

TCut mycutb = "some_background_cuts";

dataloader->PrepareTrainingAndTestTree( mycuts, mycutb, "SplitMode=Random:V");

is completely identical to this:

TTree * tree = (TTree*)file->Get("tree");

dataloader->AddSignalTree( tree, some_number );

dataloader->AddBackgroundTree( tree, some_other_number );

TCut mycuts = "some_signal_cuts" ;

TCut mycutb = "some_background_cuts";

dataloader->PrepareTrainingAndTestTree( mycuts, mycutb, "SplitMode=Random:V");

This is how I define the factory and the dataloader:

std::string factoryOptions( "!V:!Silent:Color:DrawProgressBar:AnalysisType=Classification" );

TMVA::Factory *factory = new TMVA::Factory( "TMVAClassification", outputFile, factoryOptions );

TMVA::DataLoader *dataloader=new TMVA::DataLoader("dataset");

This forces me to select the number of signal and background events manually, with nTrain_Signal and the other similar options, or with SigToBkgFraction (available only for BDTs). This is very tedious for various reasons.

My suspicion is that the problem arises because I load the same events into both the signal tree and the background tree, and only later separate signal from background with cuts.

Any help is welcome,

Alberto

This sounds weird to me. There should be a variable named weight in the Train and Test trees in the generated ROOT file. These should have differing values if your tree weights are different.

I’ll look into it as soon as I have some time.

A tedious workaround would be to generate and prepare the data set externally with pre-multiplied weights.

Cheers,

Kim

I cannot reproduce your problem. The appended file should mimic your situation, please let me know if this is not the case.

Running with

root -l -q MinimalClassification.C

and

rootbrowse out.root

and checking both the Train and Test trees, these have the correct weight distribution. Please do note that the weights need not be the same for both trees due to renormalisation of the training data.

This leads me to believe the issue lies outside of TMVA. Sorry I cannot give you a more conclusive answer.

Cheers,

Kim

MinimalClassification.C (1.3 KB)

Hi Kim,

I checked the Train and Test trees. The test tree has the correct weights, but in the train tree the background weight is always set such that the effective number of bkg events is equal to the number of sgn events.

In other words, the train tree always behaves as if something like SigToBkgFraction were set to one. As a result, the weights with which I add the trees to the dataloader are completely meaningless and the training is not influenced by them.

The strange thing is that (to my knowledge) SigToBkgFraction is an option of the BDT method, but I’m seeing this behavior in other methods too (for example MLP).

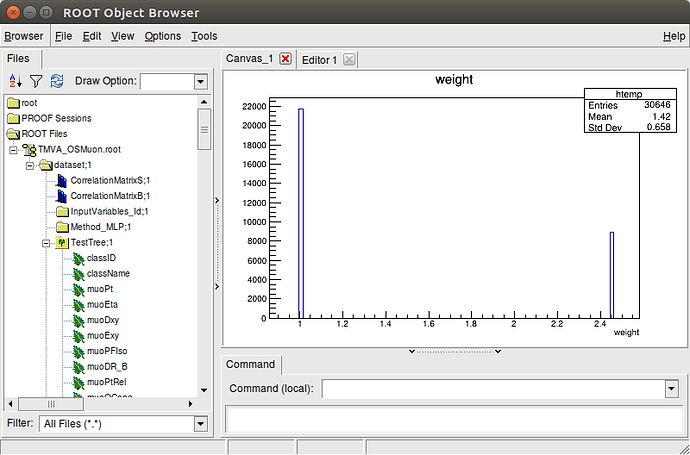

Here you can find an output file of a trained MLP with signal waight equal to 1 and background weight equal to 5.

You can clearly see that the bkg has a weight around 2.5:

Cheers,

Alberto

Hi,

Try adding the following option to your dataloader options. This removes the initial normalisation step when the data set is created.

dataloader->PrepareTrainingAndTestTree( mycuts, mycutb, "...:NormMode=None" );

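The option NormMode controls pre-method normalisation, that is, normalisation of the data done before any methods and transformations are applied.

There are three choices for this option: None, NumEvents and EqualNumEvents, with the last being the default. NumEvents ensures that the average weight for each class, independently, is 1. EqualNumEvents ensures that the average signal weight is 1 and rescales the background so that its sum of weights equals that of the signal. In both cases a global per-tree weight cancels out in the training sample; only None leaves it untouched.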
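To make the arithmetic concrete, here is a minimal sketch in plain C++ (no ROOT needed) of the per-class scale factors each mode would apply to the training weights. It assumes that EqualNumEvents gives the signal an average weight of 1 and rescales the background so that its sum of weights matches the signal's, as the TMVA Users Guide option table describes it. It is not TMVA's actual implementation, and the names NormMode, Factors and renormFactors are hypothetical.

```cpp
#include <cassert>

// Hypothetical names for illustration only; this is not TMVA code.
enum class NormMode { None, NumEvents, EqualNumEvents };

// Multiplicative factors applied to the training weights of each class.
struct Factors {
    double signal;
    double background;
};

// nSig / nBkg:       number of training events per class
// sumWSig / sumWBkg: sum of the (tree-weighted) event weights per class
Factors renormFactors(NormMode mode,
                      double nSig, double sumWSig,
                      double nBkg, double sumWBkg) {
    assert(sumWSig > 0.0 && sumWBkg > 0.0);
    switch (mode) {
    case NormMode::None:
        // Weights are left exactly as given to the dataloader.
        return {1.0, 1.0};
    case NormMode::NumEvents:
        // Average weight of 1 in each class, independently.
        return {nSig / sumWSig, nBkg / sumWBkg};
    case NormMode::EqualNumEvents:
        // Average signal weight of 1; background sum of weights
        // is scaled to equal the signal sum of weights (= nSig).
        return {nSig / sumWSig, nSig / sumWBkg};
    }
    return {1.0, 1.0};  // not reached; keeps compilers quiet
}
```

With invented numbers, say 1000 signal events at tree weight 1 and 400 background events at tree weight 5, EqualNumEvents gives a background factor of 1000/2000 = 0.5, so each background event ends up with weight 2.5. Raising the background tree weight to 50 gives a factor of 0.05 and the same final weight of 2.5, which is why the tree weights appear to do nothing unless NormMode=None is set.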

Cheers,

Kim

Thank you Kim, that works.

Cheers,

Alberto

Please mark the post as the solution to help others with similar problems.

How can I do that? I cannot find any button or option to mark a topic as solved.

Alberto

There should be a button to the left of the reply button (of the solution post) that says “Solution” when hovered over.