Hello,

Thanks for your reply.

I can run this program in my original environment, which uses the latest version of Garfield++ and ROOT 6.36, but it executes very slowly: even after waiting a few hours, the first cluster has still not been calculated. So I tried to run it in another environment with an older version of Garfield++, and that is where I encountered the error mentioned earlier.

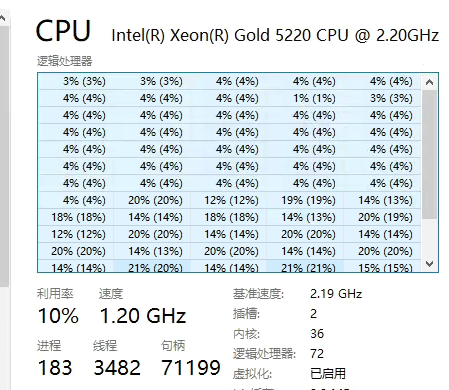

I am using WSL, and I checked the total CPU usage in Windows while I was running the program:

It shows that the total CPU utilization is only 10%. I have allocated resources to WSL, and my server has 64 logical processors, yet the program still runs more slowly for me than for others in this discussion:

https://root-forum.cern.ch/t/signal-question/63443/14

The code and parameters I used are consistent with those discussed in the link.

Here are the code and the corresponding files. Thank you in advance for your help.

mre.zip (12.8 KB)